What is the point of higher education?

By Doug Ward

The future of colleges and universities is neither clear nor certain.

The current model fails far too many students, and creating a better one will require sometimes painful change. As I’ve written before, though, many of us have approached change with a sense of urgency, providing ideas for the future for a university that will better serve students and student learning.

The accompanying video is based on a presentation I gave at a recent Red Hot Research session at KU about the future of the university. It synthesizes many ideas I’ve written about in Bloom’s Sixth, elaborates on a recent post about the university climate study, and builds on ideas I explored in an essay for Inside Higher Ed.

The takeaway: We simply must value innovative teaching and meaningful service in the university rewards system if we have any hope of effecting change. Research is important, but not to the exclusion of our undergraduate students.

Doug Ward is the associate director of the Center for Teaching Excellence and an associate professor of journalism. You can follow him on Twitter @kuediting.

KU to receive a third of $120 million in federal earmarks going to higher ed in Kansas

By Doug Ward

Colleges and universities in Kansas will receive more than $100 million this year from congressional earmarks in the federal budget, according to an analysis by Inside Higher Ed.

That places Kansas second among states in the amount earmarked for higher education. Those statistics don't include $22 million for the Kansas National Security Innovation Center on West Campus, though. When those funds are added, Kansas ranks first in the amount of earmarks for higher education ($120.8 million), followed by Arkansas ($106 million) and Mississippi ($92.4 million).

KU will receive more than a third of the money flowing to Kansas. That includes $1.6 million for a new Veterans Legal Support Clinic at the law school, and $10 million each for facilities and equipment at the KU Medical Center and the KU Hospital.

Nationwide, 707 projects at 483 colleges and universities will receive $1.3 billion this year through earmarks, Inside Higher Ed said. In Kansas, the money will go to 17 projects, with some receiving funds through multiple earmarks.

All but three of the earmarks for Kansas higher education projects were added by Sen. Jerry Moran. Rep. Jake LaTurner earmarked nearly $3 million each for projects at Kansas City Kansas Community College and Tabor College in Hillsboro, and Rep. Sharice Davids earmarked $150,000 for training vehicles for the Johnson County Regional Police Academy.

Kansas State’s Salina campus will receive $33.5 million for an aerospace training and innovation hub. K-State’s main campus will receive an additional $7 million, mostly for the National Bio and Agro-Defense Facility.

Pittsburg State will receive $5 million for a STEM ecosystem project, and Fort Hays State will receive $3 million for what is listed simply as equipment and technology. Four private colleges will share more than $7 million for various projects, and community colleges will receive $5.6 million.

2024 federal earmarks for higher education in Kansas

| Institution | Amount ($) | Purpose |

|---|---|---|

| K-State Salina | 28,000,000 | Aerospace training and innovation hub |

| KU | 22,000,000 | Kansas National Security Innovation Center |

| Wichita State | 10,000,000 | National Institute for Aviation Research tech and equipment |

| KU Medical Center | 10,000,000 | Cancer center facilities and equipment |

| KU Hospital | 10,000,000 | Facilities and equipment |

| Wichita State | 5,000,000 | National Institute for Aviation Research tech and equipment |

| Pittsburg State | 5,000,000 | STEM ecosystem |

| K-State Salina | 4,000,000 | Equipment for aerospace hub |

| K-State | 4,000,000 | Facilities and equipment for biomanufacturing training and education |

| Fort Hays State | 3,000,000 | Equipment and technology |

| K-State | 3,000,000 | Equipment and facilities |

| KCK Community College | 2,986,469 | Downtown community education center dual enrollment program |

| Tabor College | 2,858,520 | Central Kansas Business Studies and Entrepreneurial Center |

| McPherson College | 2,100,000 | Health care education, equipment, and technology |

| KU | 1,600,000 | Veterans Legal Support Clinic |

| K-State Salina | 1,500,000 | Flight simulator |

| Newman University | 1,200,000 | Agribusiness education, equipment, and support |

| Seward County Community College | 1,200,000 | Equipment and technology |

| Benedictine College | 1,000,000 | Equipment |

| Wichita State | 1,000,000 | Campus of Applied Sciences and Technology, aviation education, equipment, technology |

| Ottawa University | 900,000 | Equipment |

| Cowley County Community College | 264,000 | Welding education and equipment |

| Johnson County Community College | 150,000 | Training vehicles for Johnson County Regional Police Academy |

| Total | 120,758,989 | |

A return of earmarks

Congress stopped earmarks, which are officially known as congressionally directed spending or community project funding, in 2011 amid complaints of misuse. They were revived in 2021 with new rules intended to improve transparency and limit overall spending. They are limited to spending on nonprofits and on local, state, and tribal governments. Earmarks accounted for $12 billion of the $460 billion budget passed in March, according to Marketplace.

Earmarks have long been criticized as wasteful and prone to corruption, with one organization issuing an annual Congressional Pig Book Summary (a reference to pork-barrel politics) of how the money is used. Others argue, though, that earmarks are more transparent than other forms of spending because specific projects and their congressional sponsors are made public. They also benefit projects that might otherwise be overlooked, empowering stakeholders to speak directly with congressional leaders and making leaders more aware of local needs.

Without a doubt, though, they are steeped in the federal political process and rely on the clout individual lawmakers have on committees that approve the earmarks. That has put Moran, who has been in the Senate since 2010, in a good position through his seats on the Appropriations Committee, the Commerce, Science, and Transportation Committee, and the Veterans Affairs Committee.

What does this mean for higher education?

It’s heartening that higher education in Kansas will see an infusion of more than $100 million in federal funding.

Earmarks generally go to high-profile projects that promise new jobs, that promise new ways of addressing big challenges (security, health care), or that have drawn wide attention (cybercrimes, drones, STEM education). A Brookings Institution analysis found that Republican lawmakers like Moran generally put forth earmarks that have symbolic significance, “emphasizing American imagery and values.” In earmarks for higher education in Kansas over the past two years, that includes things like job training, biotechnology, library renovation, support for veterans, and research into aviation, cancer, Alzheimer’s, and manufacturing.

One of the downsides of earmarks, at least in terms of university financial stability, is that they are one-time grants for specific projects and do nothing to address shortfalls in existing college and university budgets or the future budgets for newly created operations. They also require lawmakers who support higher education, who have the political influence to sway spending decisions, and who are willing to work within the existing political structure. For now, at least, that puts Kansas in a good position.

Doug Ward is an associate director at the Center for Teaching Excellence and an associate professor of journalism and mass communications.

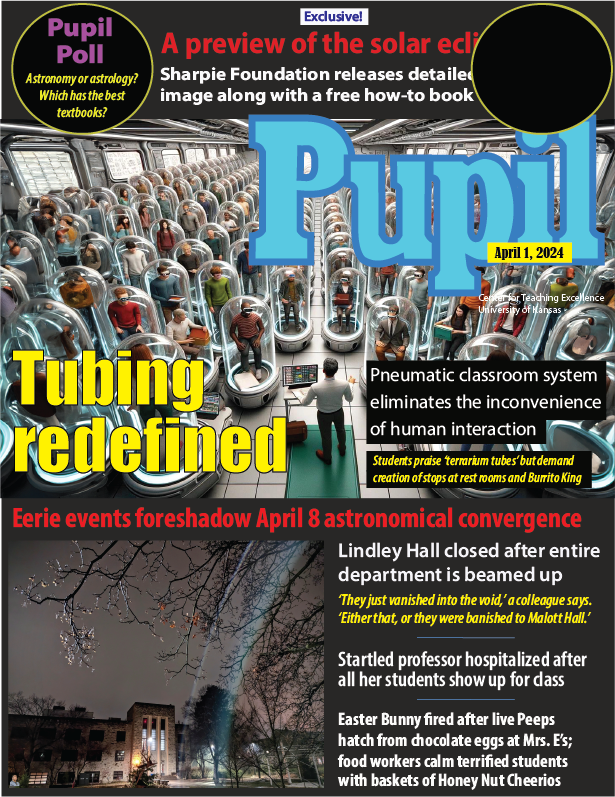

Everything you need to know for April Fools' Day

By Doug Ward

A short history lesson:

April Fools’ Day originated in 1920, when Joseph C. McCanles (who was only vaguely related to the infamous 19th-century outlaw gang) ordered the KU marching band (then known as the Beak Brigade) to line up for practice on McCook Field (near the site of the current Great Dismantling).

It was April 1, and McCanles was not aware that the lead piccolo player, Herbert “Growling Dog” McGillicuddy, had conspired with the not-yet-legendary Phog Allen to play a practical joke.

McCanles, standing atop a peach basket, raised his baton and shouted, “March!”

Band members remained in place.

“March!” McCanles ordered again.

The band stood and stared.

Then McGillicuddy began playing “Yankee Doodle” on his piccolo and Allen, disguised in a Beak Brigade uniform, raised a drum stick (the kind associated with a drum, not a turkey) and joined the rest of the band in shouting: “It’s April, fool!”

McCanles fell off his peach basket in laughter, and a tradition was born.

Either that, or April Fools’ Day was created in France, or maybe in ancient Rome, or possibly in India. We still have some checking to do.

Regardless, we at CTE want you to know that we take our April Fools seriously – so seriously, in fact, that we have published the latest issue of Pupil magazine just in time for April Fools' Day.

As always, Pupil is rock-chalk full of news that you simply must know. It is best read with “The Washington Post March” playing in the background. We don’t like to be overly prescriptive, though, especially with all the strange happenings brought on by an impending solar eclipse.

Doug Ward is an associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications.

Why talking about AI has become like talking about sex

By Doug Ward

We need to talk.

Yes, the conversation will make you uncomfortable. It’s important, though. Your students need your guidance, and if you avoid talking about this, they will act anyway – usually in unsafe ways that could have embarrassing and potentially harmful consequences.

So yes, we need to talk about generative artificial intelligence.

Consider the conversation analogous to a parent’s conversation with a teenager about sex. Susan Marshall, a teaching professor in psychology, made that wonderful analogy recently in the CTE Online Working Group, and it seems to perfectly capture faculty members’ reluctance to talk about generative AI.

Like other faculty members, Marshall has found that AI creates solid answers to questions she poses on assignments, quizzes, and exams. That, she said, makes her feel like she shouldn't talk about generative AI with students because more information might encourage cheating. She knows that is silly, she said, but talking about AI seems as difficult as talking about condom use.

It can be, but as Marshall said, we simply must have those conversations.

Sex ed, AI ed

Having frank conversations with teenagers about sex, sexually transmitted diseases, and birth control can seem like encouragement to go out and do whatever they feel like doing. Talking with teens about sex, though, does not increase their likelihood of having sex. Just the opposite. As the CDC reports: “Studies have shown that teens who report talking with their parents about sex are more likely to delay having sex and to use condoms when they do have sex.”

Similarly, researchers have found that generative AI has not increased cheating. (I haven't found any research on talking about AI.)

That hasn't assuaged concern among faculty members. A recent Chronicle of Higher Education headline captures the prevailing mood: “ChatGPT Has Everyone Freaking Out About Cheating.”

When we freak out, we often make bad decisions. So rather than talking with students about generative AI or adding material about the ethics of generative AI, many faculty members have chosen to ignore it. Or ban it. Or use AI detectors as a hammer to punish work that seems suspicious.

All that has done is make students reluctant to talk about AI. Many of them still use it. The detectors, which were never intended as evidence of cheating and which have been shown to have biases toward some students, have also led to dubious accusations of academic misconduct. Not surprisingly, that has made students even more reluctant to talk about AI or even to ask questions about AI policies, lest the instructor single them out as potential cheaters.

Without solid information or guidance, students talk to their peers about AI. Or they look up information online about how to use AI on assignments. Or they simply create accounts and, often oblivious and unprotected, experiment with generative AI on their own.

So yes, we need to talk. We need to talk with students about the strengths and weaknesses of generative AI. We need to talk about the ethics of generative AI. We need to talk about privacy and responsibility. We need to talk about skills and learning. We need to talk about why we are doing what we are doing in our classes and how it relates to students’ future.

If you aren’t sure how to talk with students about AI, draw on the many resources we have made available. Encourage students to ask questions about AI use in class. Make it clear when they may or may not use generative AI on assignments. Talk about AI often. Take away the stigma. Encourage forthright discussions.

Yes, that may make you and students uncomfortable at times. Have the talk anyway. Silence serves no one.

JSTOR offers assistance from generative AI

Ithaka S+R has released a generative AI research tool for its JSTOR database. The tool, which is in beta testing, summarizes and highlights key areas of documents, and allows users to ask questions about content. It also suggests related materials to consider. You can read more about the tool in an FAQ section on the JSTOR site.

Useful lists of AI-related tools for academia

While we are talking about Ithaka S+R, the organization has created an excellent overview of AI-related tools for higher education, assigning them to one of three categories: discovery, understanding, and creation. It also provides much the same information in list form on its site and on a Google Doc. In the overview, an Ithaka analyst and a program manager offer an interesting take on the future of generative AI:

These tools point towards a future in which the distinction between the initial act of identifying and accessing relevant sources and the subsequent work of reading and digesting those sources is irretrievably blurred if not rendered irrelevant. For organizations providing access to paywalled content, it seems likely that many of these new tools will soon become baseline features of their user interface and presage an era where that content is less “discovered” than queried and in which secondary sources are consumed largely through tertiary summaries.

Preparing for the next wave of AI

Dan Fitzpatrick, who writes and speaks about AI in education, frequently emphasizes the inevitable technological changes that educators must face. In his weekend email newsletter, he wrote about how wearable technology, coupled with generative AI, could soon provide personalized learning in ways that make traditional education obsolete. His question: “What will schools, colleges and universities offer that is different?”

In another post, he writes that many instructors and classes are stuck in the past, relying on outdated explanations from textbooks and worksheets. “It's no wonder that despite our best efforts, engagement can be a struggle,” he says, adding: “This isn't about robots replacing teachers. It's about kids becoming authors of their own learning.”

Introducing generative AI, the student

Two professors at the University of Nevada-Reno have added ChatGPT as a student in an online education course as part of a gamification approach to learning. The game immerses students in the environment of the science fiction novel and movie Dune, with students competing against ChatGPT on tasks related to language acquisition, according to the university.

That AI student has company. Ferris State University in Michigan has created two virtual students that will choose majors, join online classes, complete assignments, participate in discussion boards, and gather information about courses, Inside Higher Ed reports. The university, which is working with a Grand Rapids company called Yeti CGI on developing the artificial intelligence software for the project, said the virtual students’ movement through programs would help the university better understand how to help real students, according to Michigan Live. Ferris State is also using the experiment to promote its undergraduate AI program.

Doug Ward is associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications.

Academic mindset and student attendance

Something has been happening with class attendance. Actually, there are several somethings, which I’ll get to shortly. First, though, consider this:

- Since the start of the pandemic, many students have treated class attendance as optional, making discussion and group interaction difficult.

- Online classes tend to fill quickly, and students who enroll in physical classes often ask for an option to “attend” via a video connection.

- Many K-12 schools report record rates of absences. Students from low-income families are especially likely to miss class, according to the Hechinger Report. In many cases, Hechinger says, parents have lost trust in school and don’t see it as a priority.

The first two points are anecdotal, but faculty nationwide have reported drops in attendance. This spring, some KU instructors say that students have been eager to participate in class, perhaps more so than at any time since the pandemic. In other cases, though, attendance remains spotty.

So what’s going on?

Here are a few observations:

- Instructors became more flexible during the pandemic, and students found that they didn’t need to attend class to succeed. They have continued to expect that same flexibility.

- As college grew more expensive, some students began seeing a degree as just another consumer product. They have long been told that a degree leads to higher incomes (which it does, although less so than it once did), so the degree (not the work along the way) becomes the focus. A 2010 study, for example, said that students who see education as a product are more likely “to feel entitled to receive positive outcomes from the university; they are not, however, any more likely to be involved in their education.”

- Many instructors say that a KU attendance policy approved last year has complicated things. That policy was intended to provide flexibility for students who have legitimate reasons for missing class. Many students and faculty have taken that to mean nearly any absence should be excused.

Broader trends are in play, as well:

- Many students in their teens and 20s feel that they “lost something in the pandemic,” as Time magazine describes it. Rather than building social networks and engaging with the world, they were forced to distance themselves. As a result, the “pandemic produced a social famine, and its after-effects persist,” Eric Klinenberg, a professor at New York University, writes in the Time article.

- Many students continue to struggle with depression, anxiety and other mental health issues, with 50% to 65% saying they frequently or always feel stressed, anxious or overwhelmed, according to a recent study.

A reassertion of independence

Students have also reasserted their independence as instructors have revised attendance policies and stipulated the importance of participation. A Fall 2022 opinion piece in the University Daily Kansan expressed a common sentiment.

“If professors make every class useful and engaging, then students who value their academic and future success will show up and be present in the learning,” Natalie Terranova, a journalism student, wrote in the Kansan. “Professors have a responsibility to the students to teach, but the students have a responsibility to themselves to prioritize what is most important to them.”

She’s right, of course, and her peers at many other student newspapers have made much the same argument. We all make choices about where to devote our time. If something is useful and important, we make time for it. If it isn’t, we don’t. And though students have long sought to declare their independence during their college years, their experiences during the pandemic seem to have made many of them more comfortable skipping class, seeing that as a right.

At the same time, faculty have come under increasing pressure to help students succeed. If too many students fail or withdraw, the instructor is often blamed. Many instructors, in turn, have made class attendance a component of students’ grades, with good reason. Considerable research suggests that students who attend class get better grades. Class is also part of a structure that improves learning, and a recent study says that students who commit to attending class are more likely to show up.

A high school teacher’s observations

A recent Substack article by a high school teacher offered some observations about student behavior that further illuminate the challenges in attendance. That teacher, Nick Potkalitsky, who is also an education consultant in Ohio, says students are still stressed, lonely, and sometimes bitter about what they missed out on during the pandemic. They have trouble concentrating and require several reminders to focus on a task at hand. With more complex tasks, they need more scaffolding, direction, and oversight than they did before the pandemic.

He offered some additional insights from his interactions with students:

- They struggle to connect in person. Students were dependent on technology “for almost the entirety of their social, academic, and personal lives” during the pandemic, Potkalitsky writes. “Students hunger for connection,” he says, but they struggle to connect in person. If they don’t already belong to an online community, the strong connections among those communities make it difficult for new members to fit in.

- They dislike classrooms, where they often struggle to stay focused. They gain energy from playgrounds, parks, hiking paths, and other outdoor settings that allow them to move.

- They crave immersion and autonomy. They like to immerse themselves in a subject, something he attributes to social media. “When school does not and cannot provide these kinds of stimulation, many students disengage and await the next opportunity to use their handheld devices,” he writes.

- They “are experiencing a crisis in trust in authorities and themselves.” They chafe at the idea of school returning to “normal,” and their wariness has been reinforced by schools’ clumsy response to generative AI. “This generation knows that it needs guidance, but desires the kind of assistance that empowers,” Potkalitsky says.

Yes, those are high school students, but they will soon be college freshmen. They also exhibit many of the same behaviors faculty have observed in KU students.

Jenny Darroch, dean of business at Miami University of Ohio, writes in Inside Higher Ed that faculty and administrators need “to recognize that today’s students engage differently — and did so before the pandemic. They expect to be recognized for the knowledge they have and their ability to self-direct as they learn and grow.”

Clearly, student attitudes, expectations, skills, needs, and behaviors are changing. Attendance is perhaps just the most visible place where we see those changes. Many – perhaps most – students care deeply about learning and take class attendance seriously. Many don’t, though, and the challenges of addressing that behavior are unlikely to fade anytime soon.

We have much work to do.

Need help? At CTE, we have provided advice about motivating students, balancing flexibility and structure, and using active learning and group work to make classes more engaging and to make the value of attending class more apparent.

Briefly …

- Online enrollment remains strong. A new analysis of federal data shows that enrollment in online courses remains strong even as enrollment in many in-person courses declines, the Hechinger Report says. That trend certainly holds true at KU, where the number of credit hours generated by online courses rose 17% in Fall 2023 compared with Fall 2022. The Fall 2023 totals are 49% higher than those in Fall 2019, the semester before the pandemic began in the U.S.

- An AI pilot through NSF. The National Science Foundation has begun a pilot of what it calls the National Artificial Intelligence Research Resource. It describes the project as “a concept for a national infrastructure that connects U.S. researchers to computational, data, software, model and training resources they need to participate in AI research.” NSF is working on the pilot with 10 federal agencies and 25 organizations (mostly technology companies). You can contribute your thoughts through a survey for faculty, researchers, and students. The survey is available until March 8.

Doug Ward is an associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications at the University of Kansas.

Enrollment trends suggest a changing educational landscape

KU’s big jump in freshman enrollment this academic year ran counter to broader trends in higher education.

Around the country, college enrollment has been trending downward (although there was a slight increase in 2023), many campuses have been closing or consolidating, and a lower birthrate after the 2008-09 recession looms in what has become known as the “enrollment cliff.” That is, with fewer births, there will soon be fewer students graduating from high school and thus fewer potential college applicants.

Even so, KU was one of only 10 flagship universities where overall enrollment declined in the 2010s, according to a Chronicle of Higher Education analysis. In the fall, though, freshman enrollment increased 18%, to 5,259, the largest freshman class ever. If current trends continue, there may be another growth spurt next year. But why?

Flagships as backups

The university has credited the increase to reputation, recruitment strategies, increases in financial aid, and an improved football team. I have no doubt that recruitment strategies and financial aid played a significant role, and reputation always matters. I look for broader (often cultural) trends, though. Jeff Selingo, who writes about admissions, innovation, and the future of higher education, offers some possible explanations in his latest email newsletter. Selingo argues that many students, especially those in the top 5% to 10% of incomes, are going to state flagship universities if they don't get into top-ranked schools. He writes:

What I’m finding in my book research is that some families are increasingly skipping over this next ring of institutions from the very top because they don’t get good offers of merit aid. So, instead, the families chase dollars from a set of institutions deeper in the rankings or the kid heads off to an honors college at a flagship public with a low net price (sometimes zero) and lots of perks, like early access to course registration and sponsored research projects with faculty.

This idea of let’s try for Ivy U., and then if not, State U. has been common in some places like Georgia and Florida for decades ...

He highlights another trend that is certainly affecting KU: out-of-state enrollment growing faster than in-state enrollment.

“Nearly every public flagship enrolled a smaller share of freshmen from within their states in 2022 than they did two decades earlier,” Selingo said, citing a Chronicle analysis of data from the Department of Education.

At KU, the percentage of freshmen from Kansas fell to perhaps its lowest ever (56%) in Fall 2023, according to data from Analytics, Institutional Research, and Effectiveness. That is a decline of 13.1 percentage points from 2002, when in-state students made up 69.1% of the freshman class, according to the Chronicle analysis.

The number of Kansas students enrolled as freshmen at KU actually rose to at least a 10-year high, but the number of out-of-state students rose even more, with the university attracting more students from such states as Missouri, Nebraska, Illinois, Iowa, Colorado, Minnesota, and Oklahoma.

The declining percentage of in-state freshmen at KU is actually less substantial than that at some other state universities. Here are a few examples from nearby states, drawing on data from the Chronicle analysis.

| University | 2002 | 2022 | Change (in %pts.) |

|---|---|---|---|

| Colorado | 54.9 | 53.6 | -1.3 |

| Iowa | 59.5 | 53.8 | -5.7 |

| Nebraska | 82.9 | 73 | -9.9 |

| KU | 69.1 | 57.6 | -11.5 |

| Missouri | 82.6 | 69.9 | -12.7 |

| South Dakota | 67 | 52.8 | -14.2 |

| Illinois | 89.2 | 71.4 | -17.8 |

| Indiana | 65.1 | 52.3 | -17.8 |

| Ohio State | 84.6 | 66.7 | -17.9 |

| Wisconsin | 64.2 | 43.8 | -20.4 |

| Oklahoma | 76.3 | 52.9 | -23.4 |

| Arkansas | 80.5 | 39.3 | -41.2 |

If the trends that Selingo identified hold, KU could see continued growth among out-of-state students, especially those with family incomes of $160,000 and up. The trends also suggest that attracting those students will require higher levels of financial aid, admission to the honors program, and opportunities to work with individual faculty members. In other words, out-of-state students who are rejected by the Ivy League and similar highly ranked schools expect more perks from KU and other state flagship universities.

The sudden growth has brought in additional money to the university, but Jeff DeWitt, KU's chief financial officer, said in a presentation in November that the university had spent millions of additional dollars on scholarships, instructors, advisors, and housing.

"Record enrollment is not free," DeWitt said.

The trends that are benefiting KU and other state flagship universities have made recruitment more difficult at regional universities, the Chronicle reports. Among Kansas regents universities, for instance, only KU (4.1%) and Wichita State (5.1%) have increased enrollment over the past decade. Three others have had dramatic decreases: Pittsburg State (-25.7%), Emporia State (-25.1%), and K-State (-21.5%). Fort Hays State’s enrollment fell 5.7% during the same period. (I’ve excluded the medical center and K-State’s veterinary medicine program, both of which have increased in enrollment but are still relatively small.)

All of that portends a very different look to higher education in Kansas in the coming years.

Are student-athletes employees?

A case before the National Labor Relations Board could force colleges and universities to designate athletes as employees and to pay them as such, Politico reports.

Depending on your perspective, that could either give student-athletes what they are rightfully owed or lead to the collapse of college sports, Politico says.

One passage from the Politico article offered an interesting interpretation of how colleges and universities have looked at athletes:

Pro-labor advocates argue that schools’ “student-athlete” designation is a legal term of art originally designed to shield institutions from player workers’ compensation claims. It deprives competitors of fair compensation for their talents or influence over the system that governs much of their day-to-day college experience, they note.

The NCAA and colleges and universities say, however, that college sports would not survive in their current form if designations were changed. As a result, Politico said, they may seek intervention from Congress if the NLRB forces them to pay athletes.

A ruling is expected in the early spring.

Doug Ward is an associate director at the Center for Teaching Excellence and an associate professor of journalism and mass communications.

It’s a new semester. Do you know where the polar bear is?

We don’t know the last time the first day of classes was canceled.

We’re guessing it was January 1892, when the temperature fell to minus 23, the bottoms of thermometers shattered, and students started using the phrase “froze my bottom off” (or something approximating that).

Of course, everyone was hardier back then, having to walk five miles to campus barefoot through the snow and fend off wolves with their bare, frostbitten hands and all. At least that’s what our elders told us. So everyone may have just shrugged off the lethally cold temperatures in 1892 and showed up for class as usual.

Unfortunately, Pupil magazine didn’t exist then, so we may never know. Thankfully, it does exist today, and we have a new issue available! (No applause necessary. Our frozen fingers and toes and ears and hair follicles are tender, too.) After hunkering down in a perpetual shiver for five straight days, you no doubt need a laugh.

Please don’t laugh too hard, though. The polar bear may hear you.

Polar bear?

Shh. Have a great start to the semester!

Doug Ward is associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications.

What we’ve learned from a year of AI

A year after the release of a know-it-all chatbot, educators have yet to find a satisfying answer to a nagging question: What are we supposed to do with generative artificial intelligence?

One reason generative AI has been so perplexing to educators is that there is no single step that all instructors can take to make things easier. Here are a few things we do know, though:

- Students are using generative AI in far larger numbers than faculty, and some are using it to complete all or parts of assignments. A recent Turnitin poll said 22% of faculty were using generative AI, compared with 49% of students.

- Students in other developed countries are far more likely to use generative AI than students in the U.S., two recent polls suggest.

- Students are as conflicted as faculty about generative AI, with many worried about AI’s impact on jobs, thinking, and disinformation.

- Many faculty say that students need to know how to use generative AI but also say they have been reluctant to use it themselves.

- Detectors can provide information about the use of generative AI, but they are far from flawless and should not be the sole means of accusing students of academic misconduct.

Perhaps the biggest lesson we have learned over the past year is that flexibility in teaching and learning is crucial, especially as new generative AI tools become available and the adoption of those tools accelerates.

We don’t really have an AI problem

It’s important to understand why generative AI has made instructors feel under siege. In a forthcoming article in Academic Leader, I argue that we don’t have an AI problem. We have a structural problem:

Unfortunately, the need for change will only grow as technology, jobs, disciplines, society, and the needs of students evolve. Seen through that lens, generative AI is really just a messenger, and its message is clear: A 19th-century educational structure is ill-suited to handle changes brought on by 21st-century technology. We can either move from crisis to crisis, or we can rethink the way we approach teaching and learning, courses, curricula, faculty roles, and institutions.

That’s not a message most faculty members or administrators want to hear, but it is impossible to ignore. Colleges and universities still operate as if information were scarce and as if students can learn only from faculty members with Ph.D.s. The institutional structure of higher education was also created to exclude or fail students deemed unworthy. That’s much easier than trying to help every student succeed. We are making progress at changing that, but progress is slow even as change accelerates. I’ll be writing more about that in the coming year.

Faculty and staff are finding ways to use AI

Many instructors have made good use of generative AI in classes, and they say students are eager for such conversations. Here are a few approaches faculty have taken:

- Creating AI-written examples for students to critique.

- Allowing students to use AI but asking them to cite what AI creates and separately explain the role AI played in an assignment.

- Having students use AI to create outlines for papers and projects and to refine goals for projects.

- Allowing full use of AI as long as students check the output for accuracy and edit and improve on the AI-generated content.

- Having students design posters with AI.

- Using examples from AI to discuss the strengths and weaknesses of chatbots and the ethical issues underlying them.

- Using paper and pencils for work in class. In recent discussions with CTE ambassadors, the term “old school” came up several times, usually in relation to bans on technology. As appealing as that may seem, that approach can put some students at a disadvantage. Many aren’t used to writing by hand, and some with physical impediments simply can’t.

- For non-native English speakers, generative AI has been a confidence-builder. By evaluating their writing with a grammar checker or chatbot, they can improve phrasing and sentence construction.

- Some faculty members say that generative AI saves time by helping them create letters of recommendation, event announcements, and case studies and other elements for class.

Sara Wilson, an associate professor of mechanical engineering and a CTE faculty fellow, uses what I think is probably the best approach to AI I’ve seen. In an undergraduate course that requires a considerable amount of programming, she allows students to use whatever tools they wish to create their coding. She meets individually with each student – more than 100 of them – after each project and asks them to explain the concepts behind their work. In those brief meetings, she said, it is fairly easy to spot students who have taken shortcuts.

Like faculty, students are often conflicted

Many students seem as conflicted as faculty over generative AI. In a large introductory journalism and mass communications class where I spoke late this semester, I polled students about their AI use. Interestingly, 21% said they had never used AI and 45% said they had tried it but had done little beyond that. Among the remaining students, 27% said they used AI once a week and 7% said they used it every day. (Those numbers apply only to the students in that class, but they are similar to results from national polls I mention above.)

In describing generative AI, students used terms like “helpful,” “interesting,” “useful” and “the future,” but also “theft,” “scary,” “dangerous,” and “cheating.” Recent polls suggest that students see potential in generative AI in learning but that they see a need for colleges and universities to change. In one poll, 65% of students said that faculty needed to change the way they assess students because of AI, the same percentage that said they wanted faculty to include AI instruction in class to help them prepare for future jobs.

Students I’ve spoken with describe AI as a research tool, a learning tool, and a source of advice. Some use AI as a tutor to help them review for class or to learn about something they are interested in. Others use it to check their writing or code, conduct research, find sources, create outlines, summarize papers, draft an introduction or a conclusion for a paper, and help them in other areas of writing they find challenging. One CTE ambassador said students were excited about the possibilities of generative AI, especially if it helped faculty move away from “perfect grading.”

Time is a barrier

For faculty, one of the biggest challenges with AI is time. We’ve heard from many instructors who say that they understand the importance of integrating generative AI into classes and using it in their own work but that they lack the time to learn about AI. Others say their classes have so much content to cover that working in anything new is difficult.

Instructors are also experiencing cognitive overload. They are being asked to focus more on helping students learn. They are feeling the lingering effects of the pandemic. In many cases, class sizes are increasing; in others, declining enrollment has created anxiety. Information about disciplines, teaching practices, and world events flows unendingly. “It’s hard to keep up with everything,” one CTE ambassador said.

Generative AI dropped like a boulder into the middle of that complex teaching environment, adding yet another layer of complexity: Which AI platform to use? Which AI tools? What about privacy? Ethics? How do we make sure all students have equal access? The platforms themselves can be intimidating. One CTE ambassador summed up the feelings of many I’ve spoken with who have tried using a chatbot but weren’t sure what to do with it: “Maybe I’m not smart enough, but I don’t know what to ask.”

We will continue to provide opportunities for instructors to learn about generative AI in the new year. One ongoing resource is the Generative AI and Teaching Working Group, which will resume in the spring. It is open to anyone at KU. CTE will also be part of a workshop on generative AI on Jan. 12 at the Edwards Campus. That workshop, organized by John Bricklemyer and Heather McCain, will have a series of sessions on such topics as the ethics of generative AI, strategies for using AI, and tools and approaches to prompting for instructors to consider.

We will also continue to add to the resources we have created to help faculty adapt to generative AI. Existing resources focus on such areas as adapting courses to AI, using AI ethically in writing assignments, using AI as a tutor, and handling academic integrity. We have also provided material to help generate discussion about the biases in generative AI. I have led an effort with colleagues from the Bay View Alliance to provide information about how universities can adapt to generative AI. The first of our articles was published last week in Inside Higher Ed. Another, which offers longer-term strategies, is forthcoming in Change magazine. Another piece for administrators will be published this month in Academic Leader.

Focusing on humanity

If generative AI has taught us anything over the past year, it is that we must embrace humanity in education. Technology is an important tool, and we must keep experimenting with ways to use it effectively in teaching and learning. It can’t provide the human bond that Peter Felten talked about at the beginning of the semester and that we have made a priority at CTE, though. Something Felten said during his talk at the Teaching Summit is worth sharing again:

“There’s literally decades and decades of research that says the most important factor in almost any positive student outcome you can think about – learning, retention, graduation rate, well-being, actually things like do they vote after they graduate – the single biggest predictor is the quality of relationships they perceive they have with faculty and peers,” Felten said.

Technology can do many things, but it can’t provide the crucial human connections we all need.

In an ambassadors meeting in November, Dorothy Hines, associate professor of African and African-American studies and curriculum and teaching, summed it up this way: “AI can answer questions, but it can’t feel.” As educators, she said, it’s important that we feel so that our students learn to feel.

That is wise advice. As we continue to integrate generative AI into our work, we must do so in a human way.

Doug Ward is associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications.

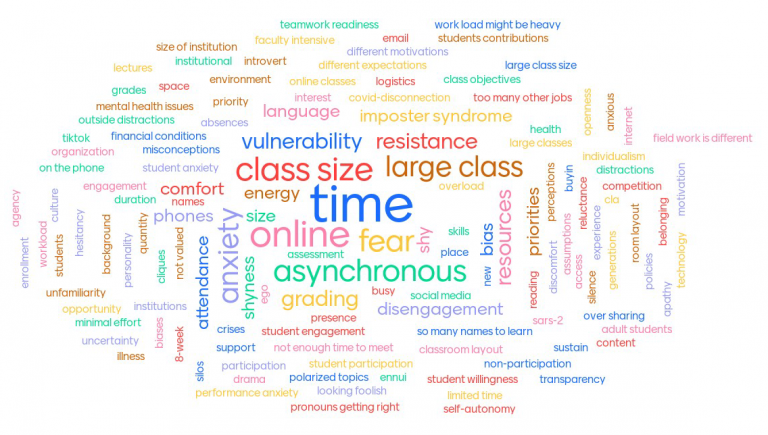

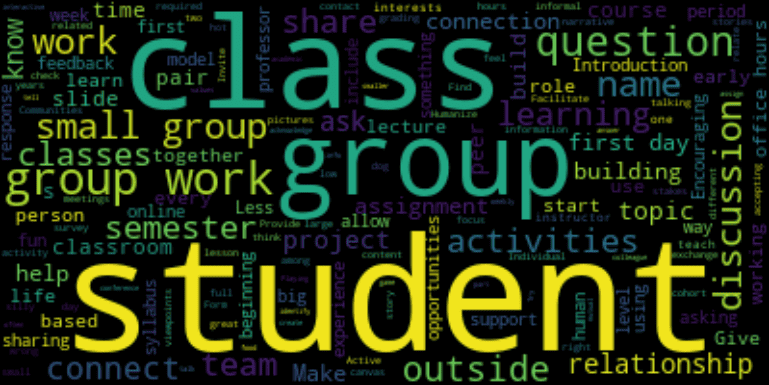

From summit poll, a list of ways to create community in classes

Peter Felten’s keynote message about building relationships through teaching found a receptive audience at this year’s Teaching Summit.

Felten, a professor of history and assistant provost for teaching and learning at Elon University, shared the stories of students who had made important connections with instructors and fellow students while at college. He used those stories to talk about the importance of humanity in teaching and about the vital role that community and connection play in students’ lives.

As part of his talk, Felten encouraged attendees to share examples of how they humanize their classes, asking:

What do you do to build relationships with and among students?

Summit attendees submitted about 140 responses. Felten shared some of them, along with a word cloud, but they were on screen only briefly. I thought it would be useful to revisit those responses and share them in a form that others could draw on.

To do that, I started with a spreadsheet from Felten that provided all the responses. I categorized most of them manually but also had ChatGPT create categories and a word cloud. Some responses had two or three examples, so I broke those into discrete parts. After editing and rearranging the categories and responses, I came up with the following list.

- Use collaborative work, projects, and activities

- Humanize yourself and students

- Learn students’ names

- Use ice breakers or get-to-know-you activities

- Create a comfortable environment and encourage open discussions

- Meet students individually

- Use active learning

- Provide feedback

- Engage students outside of class

Many of the responses could fit into more than one category, and some of the categories could certainly be combined. The nine I ended up with seemed like a reasonable way to bring a wide-ranging list of responses into a more comprehensible form, though.

Here’s that same list again, this time with example responses. It’s worth a look, not only to get new ideas for your classes but to reinforce your approaches to building community.

Use collaborative work, projects, and activities

- Pulling up some students’ burning questions for small group discussions and then full class discussion leading to additional explanation and information in class.

- Think-pair-share in large lectures so students have several opportunities to connect with each other in different sized groups.

- Group work in consistent teams.

- We have a small cohort model that keeps students together in a group over two years and allows us to have close relationships with the students naturally.

- Timed “warm-up” conversations in pairs, usually narrative-based, often related to lesson/topic in some way; I always partner with someone, too.

- Form three-person groups early in the semester on easy assignments so the focus is on building relationships with each other.

- Group projects with milestones.

- Team-based learning.

- Using CATME to form student groups, which allows students to work in groups during class and facilitates group work and other interactions outside of class.

- Put students in small groups, give them a problem, ask them to solve it, and have them report back to the class.

- Group work in classrooms and online, in particular.

Humanize yourself and students

- Provide them your story to humanize your experience and how it may relate to theirs.

- Make myself seem more human, less intimidating. Sharing about myself outside of my role as professor makes students feel more comfortable approaching me and more willing to build that connection.

- I start class by sharing something about myself, especially something where I failed. The intention is to normalize failure and ambiguity. I also have a big Spider-Man poster in my office!

- Casually talking to the students about themselves, not only talking about the class or class-related topics.

- Being approachable by encouraging them to ask questions.

- Find topics of mutual connection – ex. International students and missing food from home.

- I tell my story as a first-generation student from rural America on day one. Try to be a human!

- Admit my own weaknesses and struggles!

- For groups that will be working together throughout the semester, I ask them to identify their values and describe how they will embody those in their work.

- Multidimensional identification of the instructor in the academic syllabus.

- Have students introduce themselves by sharing something very few people know about them. Each student in small classes, or triads in big classes.

- I remind them that the connections they make are the best part of the school.

- Sit down with them the first day of class to get to know them instead of standing in front of class.

- Acknowledge that they are humans with complex lives and being a student is only part of that life.

- In online classes, create weekly Zoom discussion groups that begin with a topic but quickly become personal stories and establish relationships and mutual support.

- Just ask how they feel and be honest.

- Make time and space in every class to collaborate and share something about themselves to help build relationships.

- I often start my classes off telling humorous but somewhat embarrassing moments of mistakes I’ve made in my career and life.

Learn students’ names

- Know their names before the class.

- Learning all students’ names and faces using pics on roll before the first day and making a game with students to see if I can get them right by end of class.

- Use photo rosters to make flashcards and know students’ names in a lecture hall when they show up on first day.

Use ice breakers or get-to-know-you activities

- At the beginning of the semester, I group students and have them come up with a team name. Amazingly, this seems to connect them and build camaraderie.

- I do a survey on the first day of class to ask students about themselves, what they want to do when they graduate, etc. Then I have a starting point to start chatting before class starts.

- Detailed slide on who I am and my path to where I am today, including getting every single question wrong on my first physics exam.

- Each student creates an introduction slide with pictures and information about themselves. Then I create a class slide deck and post on Canvas.

- Introductions in-person AND in Canvas so students can network outside of class with required peer responses.

- Relationship over content: Take the first day of class to focus on building relationships. Then every class period have an activity that focuses on relationship building.

- Circulate, contact, names, stories.

- Asking a brief get-to-know-you question each week.

- Online classes: Introductions include “tell me something unique or interesting about you” and my response includes how I connect with / relate to / admire that uniqueness.

- I teach prospective educators. The second week of class I have them “teach” a lesson about themselves. They create a PowerPoint slide, share info, and answer questions from their peers.

- On the syllabus, include fun pictures of things you do outside the classroom and share some hobbies to help connect with students.

- Students introducing other students.

- Ask if a hot dog is a sandwich.

- Find shared experiences with an exercise—e.g., “What places have you been before coming here?”

- Have an email exchange with every student, sharing what our ideal day off is as part of an introductory syllabus quiz.

Meet students individually

- Student meetings at beginning of semester.

- Office hours, sitting among the students.

- Individual student hours meetings twice a semester (it’s a small class).

- Offer students extra credits if they come to my office hours during the first two weeks of class.

- Encouraging open office hours with multiple students to connect across classes and disciplines.

- One-on-ones with students.

- Mandatory early conference with professors.

- Required instructor conference, group work, embedded academic support.

- Coffee hours: informal time for students to meet with me and their peers.

- Allow time for group work when the instructor can talk with the small groups of students.

Use active learning

- Less lecture and more discussion-based activities.

- Less lecturing; being explicit about the values and principles that connect student interests.

- Active learning in classrooms to build connections between students and help them master content.

- Ask more questions than give answers.

- Help students connect the dots between the classroom, social life, professional interests, and their family.

Create a comfortable environment and encourage open discussions

- Questions of opinion – not right or wrong.

- Pulling up some students’ burning questions for small group discussions and then full class discussion, leading to additional explanation and information in class.

- Not just be accepting of different viewpoints, but guide discussions in a way that students are also accepting of each other’s.

- Give students opportunity to debate a low-stakes topic: Are hot dogs sandwiches?

- Model that asking “dumb” questions is OK and where learning happens.

- Group students for low-stakes in-class activities. Give them prompts to help get to know each other.

- Encouraging them to communicate with each other in discussion.

- Accept challenges (acknowledge student viewpoints rather than instant dismissal).

- Role Playing.

- Let them talk about their own interests.

- Conduct course survey often throughout the semester.

- Mindfulness activities.

- Students are more likely to ask friends for help. So in class I highlight their knowledge and encourage them to share.

- Food!

- Each class period starts with discussion about a fun/silly topic and a group dynamics topic.

- Good idea from colleague: assign someone the role of asking questions in small groups.

Provide feedback

- Providing feedback is showing care and support for students.

- Share work, peer feedback.

- Peer evaluations to practice course skills.

- Personal responses with assignments and group learning efforts.

- Provide individualized narrative feedback on assignments.

Engage students outside of class

- Invite students for outside-class informal cultural activities and events.

- Facilitate opportunities for students to connect outside of class time.

- In-person program orientations from a department level.

As the academic year begins, think community and connection

In a focus group before the pandemic, I heard some heart-wrenching stories from students.

One was from a young, Black woman who felt isolated and lonely. She mostly blamed herself, but the problems went far beyond her. At one point, she said:

“There’s some small classes that I’m in and like, some of my teachers don’t know my name. I mean, they don’t know my name. And I just, I kind of feel uncomfortable, because it’s like, if there’s some kids gone but I’m in there, I just want them to know I’m here. I don’t know. It’s just the principle that counts me.”

I thought about that young woman as I listened to Peter Felten, the keynote speaker at last week’s Teaching Summit. Felten, a professor of history at Elon University, drew on dozens of interviews he had conducted with students, and connected those to years of research on student success. Again and again, he emphasized the importance of connecting with students and helping students connect with each other. That can be challenging, he said, especially when class sizes are growing. The examples he provided made clear how critical that is, though.

“There’s literally decades and decades of research that says the most important factor in almost any positive student outcome you can think about – learning, retention, graduation rate, well-being, actually things like do they vote after they graduate – the single biggest predictor is the quality of relationships they perceive they have with faculty and peers,” Felten said.

Moving beyond barriers

Felten emphasized that interactions between students and instructors are often brief. It would be impossible for instructors to act as long-term mentors for all their students, but students often just need reassurance or validation: hearing a greeting from the instructor, having the instructor remember their name, getting meaningful feedback. Quality matters more than quantity, Felten said. The crucial elements are making human connections and helping students feel that they belong.

Creating that interaction isn’t always easy, Felten said. He gave an example of a first-generation student named Oliguria, whose parents had emphasized the importance of independence.

“She said she had so internalized this message from her parents that you have to do college alone, that when she got to college she thought it was cheating to ask questions in class,” Felten said. “That was her word: cheating. It’s cheating to go to the writing center, cheating to go to the tutoring center.”

Instructors need to help students move past those types of beliefs and to see the importance of asking for help, Felten said.

Another challenge is helping students push past impostor syndrome, or doubts about abilities and feelings that someone will say they don’t belong. That is especially prevalent in academia, Felten said. Others around you can seem so poised and so knowledgeable. That can make students feel that they don’t belong in a class, a discipline, or even in college because they have learned to feel that “if you’re struggling, there must be something wrong with you.”

That misconception can make it difficult for students to recover from early stumbles and to appreciate difficulty as an important part of learning.

“I don’t know about your experience,” Felten said. “What I do is hard. But we don’t tell students that, right? They think if it’s hard, there’s something wrong with them.”

Because of that, students hesitate to ask for help and don’t want their instructors to know about their struggles. As one student told Felten, “pride gets in the way of acknowledging that I don’t understand something.”

Small interactions with huge consequences

Getting past that pride can make a huge difference, Felten said. He gave the example of Joshua, a 30-year-old community college student who nearly dropped out because he found calculus especially difficult.

“I suddenly began to have these feelings like, I didn’t belong in this class, that my education, what I was trying to achieve wasn’t possible,” Joshua told Felten. “And my goals were just obscenely further away than I thought they were.”

He spoke to his professor, who told him to go home and read about impostor syndrome. That helped Joshua feel more confident. He sought out a tutor and eventually got an A in the class.

The professor, Felten said, was an adjunct who taught only one semester. Joshua met with him only once, but that meeting had far-reaching effects. Joshua completed his associate’s degree and later graduated from Purdue.

Felten called Joshua’s professor “a mentor of the moment.” That means “paying attention to the person right in front of you and being able to give them the right kind of challenge, the right kind of support, the right kind of guidance that they need right then.”

Felten also talked about another student, José, who wanted to become a nurse. José loved life sciences but bristled at a requirement to take a course outside his major. He signed up for a geology class simply because it fit into his schedule, and he vowed to do as little as possible. Ultimately, Felten said, the class ended up being “one of the most powerful classes he had taken.”

José told Felten: “My professor made something as boring as rocks interesting. The passion she had, her subject, was something that she loved.”

José never got to know the professor personally, but the way she conducted the class – interactively and passionately – was transformative, Felten said.

“The most important thing is that this class became a community,” José told Felten. “She made us interact with each other and with the subject. It just came together because of her passion.”

The importance of good feedback

Felten also talked about Nellie, a student who started college just as the pandemic hit. In a writing course, she liked the instructor’s feedback on assignments so much that she later took another class from the same professor, even though she never talked directly to her.

“She would have this little paragraph in the comments saying, you did this super well in your paper. And that little bit of encouragement, even though I’m not face to face with this teacher at all, made a world of difference to me,” Nellie told Felten. “We’ve never met in person or even had a conversation, but she has made a huge positive difference in my education.”

Instructors can easily overlook the impact of something like feedback on written assignments, but Felten said it can be validating. He cited a study from a large Australian university that found that the biggest predictor of undergraduate well-being was the quality of teaching. The authors of the study said students weren’t expecting their instructors to be counselors or therapists or even long-term mentors.

“They’re asking us to do our job well, which is to teach well, to assess clearly and to teach as if learning is an interactive human thing, to connect with each other and with the material,” Felten said.

The importance of community

I’ll go back to the young woman I interviewed a few years ago, and how isolated she felt in her classes and how alone she felt outside classes.

“I think it’s just really interesting,” she said. “I see a lot of different faces every day, but I still feel so isolated. And I know sometimes that’s like my problem. But I do feel like I should know way more people. And I just, I want to know more people.”

Her story was heart-wrenching. She and another student – a white transfer student – talked about getting “vibes” from classmates that pushed them deeper into isolation and made life at the university challenging. Neither had gained a sense of belonging at the university, even though both desperately sought a sense of connection.

In his talk at the Teaching Summit, Felten said the antidote to that was to create community in our classes.

“The most important place for relationships in college is the classroom,” Felten said. “If they don’t happen there, we can’t guarantee they happen for all students.”

He added later: “If we know that’s true, why don’t we organize our courses, why don’t we organize our curriculum, why don’t we organize our programs, our universities as if that was a central factor in all of the good things that can happen here?”

Why indeed.

Doug Ward is associate director of the Center for Teaching Excellence and an associate professor of journalism and mass communications at the University of Kansas.