How to lessen concerns about generative AI and academic integrity

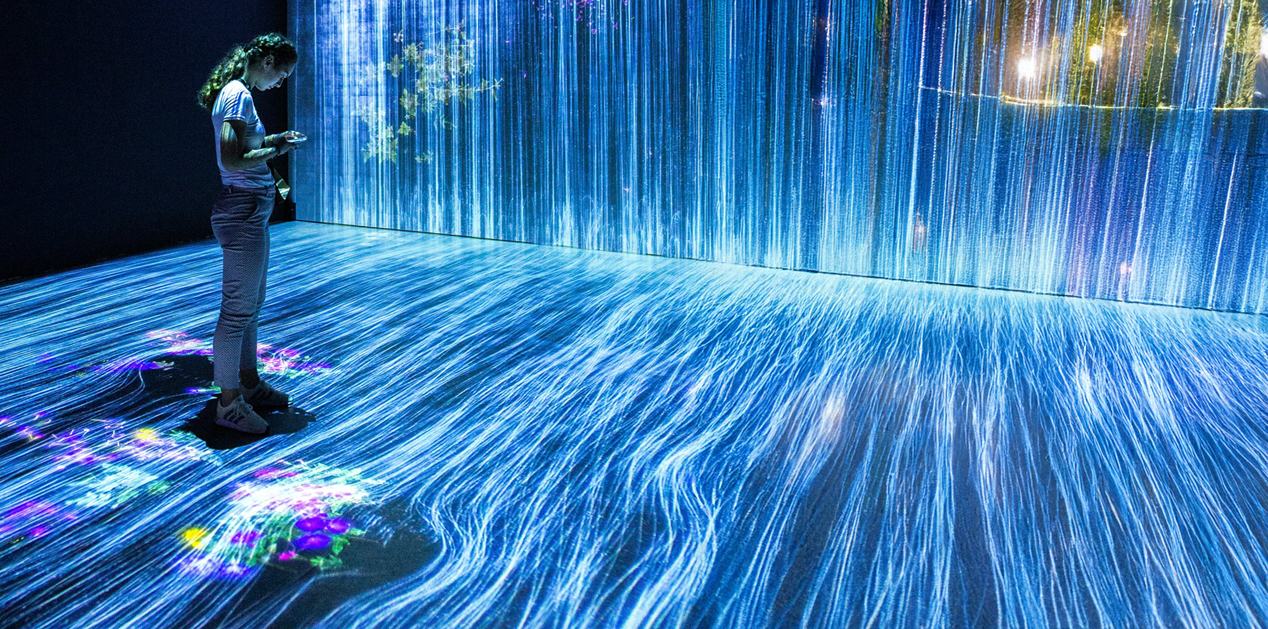

Mahdis Mousavi, Unsplash

By Doug Ward

A new survey from the Association of American Colleges and Universities emphasizes the challenges instructors face in handling generative artificial intelligence in their classes.