Nearly a decade ago, the Associated Press began distributing articles written by an artificial intelligence platform.

Not surprisingly, that news sent ripples of concern through newsrooms. If a bot could turn structured data into comprehensible – even fluid – prose, where did humans fit into the process? Did this portend yet more ominous changes in the profession?

I bring that up because educators are raising many of the same concerns today about ChatGPT, which can not only write fluid prose on command but also create poetry and computer code, solve mathematical problems, and seemingly do everything but wipe your nose and tuck you into bed at night. (It will write you a bedtime story if you ask, though.)

In the short term, ChatGPT definitely creates challenges. It drastically weakens approaches and techniques that educators have long used to help students develop foundational skills. It also arrives at a time when instructors are still reeling from the pandemic, struggling with how to draw many disengaged students back into learning, adapting to a new learning management system and new assessment expectations, and, in most disciplines, worrying about the potential effects of lower enrollment.

In the long term, though, we have no choice but to accept artificial intelligence. In doing so, we have an opportunity to develop new types of assignments and assessments that challenge students intellectually and draw on perhaps the biggest advantage we have as educators: our humanity.

Lessons from journalism

That was clearly the lesson the Associated Press learned when it adopted a platform developed by Automated Insights in 2014, one that analyzes data and generates explanatory articles.

For instance, AP began using the technology to write articles about companies’ quarterly earnings reports, articles that follow a predictable pattern:

The Widget Company on Friday reported earnings of $x million on revenues of $y million, exceeding analyst expectations and sending the stock price up z%.

It later began using the technology to write game stories at basketball tournaments. Within seconds, reporters or editors could make basic stories available electronically, freeing themselves to talk with coaches and players and to write deeper analyses of games.

The AI platform freed business and financial journalists from the drudgery of churning out dozens of rote earnings stories, giving them time to concentrate on more substantial topics. (For a couple of years, I subscribed to an Automated Insights service that turned web analytics into written reports. Those fluidly written reports highlighted key information about site visitors and provided a great way to monitor web traffic. The company eventually stopped offering that service as its corporate clients grew.)

I see the same opportunity in higher education today. ChatGPT and other artificial intelligence platforms will force us to think beyond the formulaic assignments we sometimes use and find new ways to help students write better, think more deeply, and gain skills they will need in their careers.

As Grant Jun Otsuki of Victoria University of Wellington writes in The Conversation: “If we teach students to write things a computer can, then we’re training them for jobs a computer can do, for cheaper.”

Rapid developments in AI may also force higher education to address long-festering questions about the relevance of a college education, a grading system that emphasizes GPA over learning, and a product-driven approach that reduces a diploma to a series of checklists.

So what can we do?

Those issues are for later, though. For many instructors, the pressing question is how to make it through the semester. Here are some suggestions:

Have frank discussions with students. Talk with them about your expectations and how you will view (and grade) assignments generated solely with artificial intelligence. (That writing is often identifiable, but tools like OpenAI Detector and CheckforAI can help.) Emphasize the importance of learning and explain why you are having them complete the assignments you use. Why is your class structured as it is? How will they use the skills they gain? That sort of transparency has always been important, but it is even more so now.

Students intent on cheating will always cheat. Some draw from archives at Greek houses, buy papers online, or have a friend do the work for them. ChatGPT is just another means of avoiding the work that learning requires. Making the purpose of learning more apparent will help win over some students, as will flexibility and choice in assignments. This is also a good time to emphasize the importance of human interaction in learning.

Build in reflection. Reflection is an important part of helping students develop their metacognitive skills and learn about their own learning. It can also help them understand how to integrate AI into their learning processes and how to build and expand on what AI provides. Reflection can also reinforce academic honesty: rather than hiding how they completed an assignment, students who reflect on their process learn to embrace transparency.

Adapt assignments. Create assignments in which students start with ChatGPT and then discuss its strengths and weaknesses. Have students compare the output from AI writing platforms, critique that output, and then create strategies for building on it and improving it. Anne Bruder offers additional suggestions in Education Week, Ethan Mollick does the same on his blog, and Anna Mills has created a Google Doc with many ideas (one of a series of documents and curated resources she has made available). Paul Fyfe of North Carolina State provides perhaps the most in-depth take on the use of AI in teaching, having experimented with an earlier version of the ChatGPT model more than a year ago. CTE has also created an annotated bibliography of resources.

We are all adapting to this new environment, and CTE plans additional discussions this semester to help faculty members think through the ramifications of a technology that two NPR hosts described as startlingly futuristic. Those hosts, Greg Rosalsky and Emma Peaslee of NPR’s Planet Money, said that using ChatGPT “has been like getting a peek into the future, a future that not too long ago would have seemed like science fiction.”

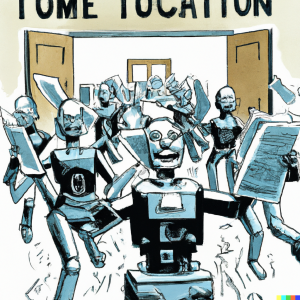

To that I would add that this particular science fiction story involves a robot that drops unexpectedly into the middle of town and immediately demonstrates powers that elicit awe, anxiety, and fear in the human population. The robot can’t be sent back, so the humans must find ways to ally with it.

We will be living this story as it unfolds.
