The Evolution of a Term Project: Iterative Course Redesign to Enhance Student Learning


A psychology professor modifies an upper-level psychology course to enhance students’ analysis, integration and application of empirical research in a term project.

—Andrea Follmer Greenhoot (2010)

Portfolio Overview

Cognitive Development (PSYC 430) is a survey course on the mental changes that take place from birth through adolescence. The main goals of this course are: 1) To promote students’ understanding of the development of cognitive abilities between infancy and adolescence; 2) To introduce students to the use of research methods in studying cognitive development; 3) To teach students how to apply newly learned concepts to novel and meaningful settings; and 4) To foster the development of skills that will facilitate further learning and reasoning, including information literacy, critical thinking, argument development, and verbal and written expression.

One of the requirements of this course is to write a paper on a cognitive development topic using primary research sources. The project is designed to integrate a number of skills; students must identify and locate appropriate sources, read and evaluate psychological research, apply their research findings to real-world situations, and write a clear and cohesive response to the question. However, the first offerings of this course indicated that students were having difficulties with each step of this process, so I have made a number of changes in order to better support the development of the skills required for successful completion of the project. From 2008 to 2011, my work on this course was accelerated by participation in a project, funded by the Spencer and Teagle foundations, aimed at enhancing students’ critical thinking and writing skills in large classes. This portfolio describes my changes to the course both before and after the Spencer/Teagle course redesign project.

During the first offering of the course, in Fall 1999, students were initially required to find and analyze five relevant empirical articles for the term project. However, I found that students had a difficult time identifying and evaluating scholarly sources. Furthermore, they had difficulty reading, analyzing, and integrating the information provided by empirical articles, and many struggled with basic writing mechanics.

Thus, across the subsequent offerings of the course, I have made a number of changes to the term project assignment. These included: (1) reducing the number of articles that students are required to integrate, (2) developing a detailed grading rubric, (3) breaking the assignment down into multiple subcomponents that are completed throughout the semester and providing support and feedback at each stage, and (4) as of Spring 2007, partnering with the Writing Center, the KU Libraries and graduate student fellows who received supplemental training in those units.

There were several indications that the latest course modifications were successful. In terms of the supporting assignments, not one student required individual assistance locating and selecting his or her empirical sources this past semester, and no one submitted articles from inappropriate sources (e.g., popular media). Furthermore, I was very impressed with the insightful and constructive feedback the students gave each other during the peer review process; most students provided high-level comments on their peers’ writing mechanics, clarity, detail and conclusions.

Overall grades on the term project have changed very little from year to year, in part because my expectations for what constitutes “outstanding” or “adequate” work have risen with the level of support I provide to students. Nevertheless, comparisons of actual student products from year to year show that the papers, particularly the “A papers,” were clearer and more sophisticated in the most recent, team-designed offerings than in previous semesters. In particular, I observed substantial improvements in students’ ability to synthesize diverse research findings, draw appropriate conclusions, and apply those findings. These shifts are especially noteworthy because, at the same time that I increased support and feedback to the students, I also increased the number of articles students were required to synthesize and the rigor of the grading rubrics. Thus, my students are performing better on an even more sophisticated learning task.

I was very happy with students’ improved information literacy skills and the increased level of synthesis after the most recent course modifications and instructional partnership. Student work is more closely approximating the types of upper-level work that I think should be exhibited. Therefore, this is an approach that I will continue to use. Furthermore, my current approach represents an accumulation of many small changes that I have made across several years of teaching and “tweaking” this course, which has made the course refinement process quite manageable. However, there are still several areas that need further refinement and exploration.

First, a large subset of the students had a negative assessment of the peer-review process, possibly because they did not recognize what they were gaining from it. In future offerings, it might be helpful to make the connection between this activity and the term project more explicit to the students. A second issue is that the writing consultants were underutilized. The GTA and I provided very specific feedback and suggestions on the students’ optional rough drafts, which might have led students to believe that the writing consultants were superfluous. In the Fall 2007 offering of the course, I restructured the way we assessed rough drafts: the GTA and I focused on evaluating the papers rather than resolving problems for students, and we encouraged students to visit the Writing Center consultants for help working through those problems. This modification did lead to increased visits to the writing consultants. Finally, I would like to continue gradually increasing the difficulty of this assignment to challenge students to produce more sophisticated work.

PSYC 430 Cognitive Development (.docx) is a survey course on the mental changes that take place from birth through adolescence. The course covers the development of vision and other perceptual abilities, attention, memory, language, problem solving and reasoning, intelligence, and social cognition, or thinking about social phenomena. As this is an upper-level course in the Psychology curriculum, most students are juniors or seniors. The course normally enrolls between 80 and 100 students per semester.

The main goals of this course are:

- To promote students’ understanding of the development of cognitive abilities between infancy and adolescence;

- To introduce students to the use of research methods in studying cognitive development;

- To teach students how to apply newly learned concepts to novel and meaningful settings;

- To foster the development of skills that will facilitate further learning and reasoning, including information literacy, critical thinking, argument development, and verbal and written expression.

One course requirement is to write a paper on a topic in cognitive development using primary sources from the psychological research literature. The paper takes the form of a hypothetical advice column in a parenting magazine. Students are presented with several “reader questions” (pdf) related to cognitive development and are instructed to write a response to one question using psychological research. To complete the project, students must identify and locate appropriate sources, read and evaluate psychological research, apply their research findings to real-world situations, and write a clear and cohesive response to the question. This project is designed to integrate a number of the skills I want students to take away from this class. However, during the first offerings of this course, I found that students had difficulties with each step of this process. Therefore, I have made a number of changes across multiple offerings to better scaffold, or support the development of, the skills required for successful completion of the project.

To supplement the material below, please see my complete timeline of course changes (.pptx).

When I initially implemented the term project in Fall 1999, I required students to find five relevant empirical articles and synthesize their results in their papers. To help them locate appropriate articles, I provided detailed written instructions on how to find articles through PsycINFO, a psychological research database. I also required students to turn in their references and an outline of the paper early in the semester, and I provided feedback regarding their progress. However, students had a difficult time identifying and evaluating scholarly sources, frequently submitting articles from popular media or empirical articles that were only vaguely related to the topic. Furthermore, they had difficulty reading, analyzing, and integrating the information provided by empirical articles, and many struggled with basic writing mechanics. Thus, over the years I have made a number of modifications to help students develop the skills involved in successful completion of the project, including reducing the number of sources that students were required to use, increasing the number of supporting assignments given throughout the semester, and increasing the number of resources available to the students.

Fall 2000 to Fall 2002: Simplification and scaffolding

By Fall 2000, I had reduced the number of articles that students were required to analyze in their papers to two, one of which I identified and one of which they identified and located. I encouraged students to submit rough drafts of their papers for feedback prior to the final due date, and approximately 20% of the class did so in any given semester. I also developed and distributed a detailed grading rubric to make my expectations for the final paper more transparent to the students, as well as to the GTA who assisted with grading. Finally, I asked students to analyze a brief research article as part of an in-class assignment (pdf), to model the steps involved in evaluating and summarizing empirical writing and illustrate the level of analysis I wanted them to exhibit.

Fall 2003 to Spring 2006: Efforts to improve data gathering

Many of the changes I made between 2003 and 2006 targeted students’ abilities to identify and evaluate empirical articles in the psychological literature. Beginning in Fall 2003, I made source identification a larger percentage of the grade on the project (from 10% to 25%), because students did not seem to be taking this assignment step seriously enough. I also required them to explain in writing how the article was related to the paper topic, to prompt them to contemplate this issue as they made their article selections. Furthermore, I asked the Psychology Subject Specialist in the KU Libraries (Tami Albin and later Erin Ellis) to conduct an in-class tour of PsycINFO.

These changes led to noticeable improvements in students’ performance on the project. During this time period, most students selected appropriate scholarly sources, although these were not always optimally relevant to the topic. Furthermore, the students produced solid analyses and summaries of their empirical articles. Nevertheless, I was still observing some student difficulties. First, the in-class PsycINFO tutorial was not meeting all the students’ needs, as the GTA and I were still meeting with 15–20% of the students individually to help them find appropriate and relevant articles. Second, the students seemed to devote most of their efforts to the summary and evaluation of the individual articles and produced comparatively weak syntheses of the research findings. Third, many students continued to struggle with writing mechanics and organization. Finally, because the project was based on only two empirical articles, it did not represent the level of scholarly work I wanted to see in upper-level students.

Spring 2007 to Fall 2010: Additional scaffolding and team design

Between 2007 and 2010, I made another wave of course revisions (pdf), accelerated by a team-design project supported by the Spencer and Teagle foundations. This project partnered faculty with specialists from the Libraries and the Writing Center and with graduate student fellows (GSFs) who received supplemental training in those units. Instruction teams collaborated on course and assignment design and also consulted with students. Working with my team (which over the years included Erin Ellis of the Libraries, Moira Ozias and Terese Thonus of the Writing Center, and several excellent psychology graduate students), I broke the project into additional stages and designed new learning activities to help students progressively build their skills over the semester. At the same time, I increased the number of required sources, to see if I could leverage this increased assistance to challenge students to produce higher-level work. The staged activities can be broadly organized into three major phases implemented across the semester:

Early stages: Information literacy

Early in the semester, Erin Ellis and the GSFs led hands-on literature search sessions in a computer instruction lab. The labs were held over two class periods, with half of the students attending a regular class session and the other half going to the lab during each class period. Erin also offered a follow-up workshop to assist students who had additional questions about the process. Two weeks after the initial session, students submitted the first pages of their articles (to ensure that they had tracked down the full article) and a paragraph explaining why they had selected each source, to prompt them to reflect on and monitor the quality of their choices.

Middle stages: Critical reading

In the intermediate stages, we incorporated additional modeling and guidance in reading and evaluating empirical articles, beginning with a paper describing a highly flawed study before moving on to a paper describing a study with more subtle limitations and caveats. We designed these activities to make visible how an expert processes an empirical article, and we repeated them with different articles throughout the semester. We also required students to write brief summaries and evaluations of their approved advice column sources well before the paper was due. The goal was to “free up resources” for the difficult task of synthesizing multiple empirical articles by getting students to read each article critically early in the process. The students brought their summaries to an in-class peer workshop (pdf) and reviewed each other’s work in small groups organized by topic.

Late stages: Synthesis, application, and writing

To target synthesis and application, in the second half of the peer workshop, students discussed how to integrate their research into conclusions for their papers. This gave them an opportunity to develop their ideas about the synthesis and implications of their research with peers who were knowledgeable about their topic but had drawn on different sources. I hoped that these discussions would help students identify broader patterns and implications from the articles, and also come to realize that empirically supported answers to real-world questions are not always clear-cut. We also developed an assignment that asked students to apply the paper rubric (pdf) to sample papers. I had always distributed the rubric with the assignment instructions, but it was not clear that students attended to it enough to really grasp what high-quality research synthesis and written argument development look like. By asking them to apply it to someone else’s work, I hoped to improve their understanding of these dimensions before they wrote their own papers. Finally, to support writing skills, we encouraged all students to meet with the GSFs for individual consultations on rough drafts, and provided opportunities for revision of the final paper.

There were several indications that the latest course modifications were successful. These indicators included student performance on the progressive assignments and the final product, student commentary on their peers’ article summaries generated during the peer review session, and student responses to an end-of-semester survey about the term project.

Article Selection

The Literature Search Lab was a clear success. Whereas the GTA and I used to provide individual assistance with article selection to 10 to 15 students each semester, following the Literature Search Lab not one student required individual assistance. Moreover, no students submitted articles from non-scholarly sources, and the vast majority identified relevant articles on the first attempt.

Article Summaries, Peer Review, and Analysis

Overall, I was very impressed with the insightful and constructive feedback the students gave each other during the peer review process. As illustrated by these examples, most students provided high-level comments on their peers’ writing mechanics, clarity, detail and conclusions. Because of the level of commentary the students provided each other, the GTAs and I needed to do less of the reviewing ourselves, which made the process more manageable for us. The students were also very engaged in the analysis portion of this class period, and I observed several groups actively debating the implications of their research. Check the links below for examples of the peer review assignments.

Final term papers

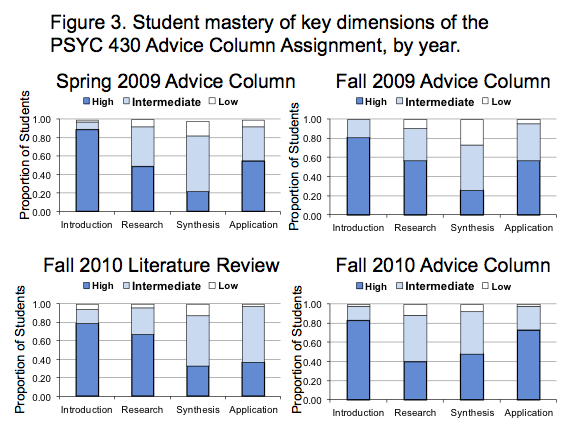

Grades on the term project (and supporting assignments) have changed very little from year to year, in part because my expectations for what constitutes “outstanding” or “adequate” work have increased with the level of support given to students. (See the grade distributions for Fall 2005 in Figure 1 and for Spring 2007 in Figure 2.) However, after the team-design modifications, fewer students produced very poor work (i.e., D or F papers). Furthermore, as illustrated in Figure 3 below, comparisons of actual student products from year to year reveal that the papers were clearer and more sophisticated. Over the last few offerings I observed particular improvement in students’ ability to synthesize diverse research findings and draw appropriate conclusions, even though they were required to use more empirical articles than in previous semesters. Before the team-design work, few students produced a high-level synthesis, whereas 20–25% did so in the first three team-design offerings.

In the most recent semester (Fall 2010) we made further revisions based on these assessments of student learning, such as asking students to write a literature review paper before writing a much briefer advice column, and shifting class time away from information delivery via lecture toward the analysis and synthesis of information delivered via the reading. These changes led to a substantial improvement in synthesis, with almost half the students scoring at the highest level, and also to notable improvements in application of the research. Moreover, most of these advice columns were written in a style more consistent with the actual genre and intended audience than those from the first two years of the project (which still read more like literature review papers), suggesting that constructing two different written products from the same research helped students better grasp issues of genre and audience. These improvements notwithstanding, performance in “use of research” declined, suggesting that I need to help students better gauge the right level of detail when writing about research for a general audience. See Figure 3 below for a summary of performance changes.

Writing consultant utilization

In the first team-designed offering, we were surprised to see that the writing consultations were under-utilized. In fact, only one student actually visited the writing consultants, but that student reported that these consultations were the most helpful part of the process. My instructional partners in the Writing Center suggested that this under-utilization could be driven by the timing of the consulting sessions as well as the high specificity of the suggestions that the GTAs and I had provided on the drafts. Thus, we rescheduled the sessions to take place shortly after the students received feedback on their rough drafts, rather than as a preliminary/proactive step in the writing process (at which point students may not realize they need the consultation). We also restructured our reviews of the drafts to focus on evaluating the papers without resolving problems for them, and encouraged students to sign up for consulting sessions for assistance working through the problems we had identified. After these modifications we did see greater utilization of the writing consultations, along with improved written products.

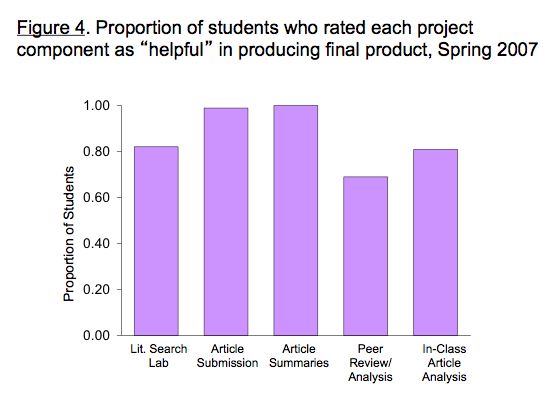

Student feedback

At the end of the first semester of the team-design approach, students completed an anonymous survey on Blackboard for a small amount of extra credit. Seventy-two percent of the students completed it, and these students did not differ in their project grades from those who opted not to complete it. The survey asked open-ended questions about how helpful they found the individual steps of the term project and the supporting assignments. Overall, students’ feedback was very positive. Figure 4 summarizes the students’ responses to this survey.

The vast majority of students viewed the Literature Search Lab as helpful; those who indicated that it was not particularly helpful acknowledged that they had considerable experience in this domain already, and often used the time in the lab to find their articles:

In regards to the PsycINFO Lab, I was surprisingly impressed. Through the course of my studies I have used PsycINFO many times and assumed the lab would be boring and a waste of time. I was completely wrong. I can now use its resources more efficiently and have greatly improved my work in other classes based on a few techniques learned in the lab. Thank you!

The students almost unanimously felt that the staged approach, including submitting their articles for feedback and completing article summaries early in the semester, was very helpful. Interestingly, however, the Peer Review and Analysis component received mixed reviews. About one-third of the respondents reported that the peer-review process was not especially helpful, because their peers showed little interest or effort or were not mature enough to do this well:

The in-class peer review was pretty pointless. I didn’t get good feedback from my peers, most likely because they don’t really care about my paper.

I think the only thing that wasn’t very helpful was the peer review. I think that in this level of a class with many people who aren’t PSYC majors, the maturity is just not there to have a worthwhile peer review.

These comments were at odds with my casual assessment of the discussions taking place during this class session, but looking through all of the reviews, I did observe some variation in the depth of comments that students received. On the other hand, a majority of the respondents had a positive assessment of this component because they were able to get feedback on their own writing, and get a feel for what their peers were producing:

At first I was nervous about this day, because I was paired with two PSYC majors and I am not. In the end, I got some terrific feedback and it gave me more confidence that maybe I actually did know what I was talking about.

The in-class peer review and analysis of article summaries was my favorite part besides the rough draft and the whole editing process. This step not only allowed me to see what others thought of my writing and edit it, but I was able to compare my work with other peoples’ work, along with my article with other peoples’ article. It gave me some insight on how the writing should be conducted and put together along with what other people had. Reading and editing some of the other articles was great because it was interesting to see what they had pulled from their article that I hadn’t from mine, or what they didn’t pull that I did.

Examples of student work

Here are examples of a strong and a weak paper written during a pre-team-design semester of the course (Spring 2006). The sample A paper (pdf) provides a clearer and more compelling introduction to the issues and clearer descriptions of the research than the sample C paper. The sample C paper (pdf) also showed weaker writing mechanics and a minimal level of integration across the two studies, with commentary along the lines of “the two studies used different measures, but their results were similar.” But even in the stronger papers, the typical synthesis (if present at all) involved shallow comparisons of the methods and results of the two studies, as can be seen in this sample A paper. Here, the only attempt to synthesize the findings of the two studies appears on page 4. The student mentions some similarities in the goals and conclusions of the two studies and makes a brief reference to a difference in their major findings: the first study showed that there is a critical period (i.e., children acquire language more easily than adults), whereas the second study showed that there are no sharp age-related declines in language learning. The student does not attempt to reconcile these differences or discuss how the findings could fit together (e.g., the first study compared two groups of subjects, whereas the second study provided a more precise examination of age-related change by treating age as a continuous variable).

Here are examples of A, B and C papers produced by students after the team-based redesign work. As in previous semesters, papers scored at these different levels varied in the clarity of the study descriptions, as well as in basic writing mechanics. But they also differed markedly in the level of integration across research findings. This first example of an A paper (pdf) was produced during the initial team-design offering, in Spring 2007. This paper illustrates a high level of integration across the research findings presented, particularly given that the students in this semester were required to use three rather than two sources. The syntheses provided in this and the other A papers this semester more closely mirror an expert’s performance in a literature review. Indeed, this paper seems to be written more in the style of an academic literature review than an actual advice column, and this was quite typical of the papers produced during the early team-designed offerings. The overall quality of the A papers continued to improve following additional iterative course modifications.

This sample A paper (pdf) from the Fall 2010 offering shows much greater adherence to the genre, as well as a high level of research synthesis. This student observed that the four studies examined the relation between infant sign language and spoken language development using different methodologies, and then used that information as an organizing theme throughout the paper. Much of the synthesis was woven into the transitions between study descriptions.

Similarly, the author of the second A example (pdf) from Fall 2010 identified multiple points of contrast between four studies related to the sleep and cognitive development topic and integrated these contrasts into the discussion of the studies. Her final conclusions show how the four sets of findings have different, but interrelated, implications for the real-world issue.

In contrast, in the B example (pdf) from Fall 2010 the student attempts to make some connections between the findings of the four studies by mentioning some of the similarities and differences between them, but does not go beyond this to discuss how the findings could complement each other or whether there is some reason to place more weight on some findings than others. The C example (pdf) does mention generalizations from the research on baby sign language (e.g., “A significant amount of research has shown that...” and “reviewing all of the research, it is evident to me that...”) but does not provide a convincing synthesis because s/he provides very little review of that research.

I was very happy with students’ improved information literacy skills and the increased level of synthesis evidenced in student work after the most recent, team-designed course modifications. Student work is more closely approximating the types of upper-level work that I think should be exhibited. Therefore, this is an approach that I will continue to use. Furthermore, my current approach represents an accumulation of many small changes that I have made across several years of teaching and “tweaking” this course, which has made the course refinement process quite manageable.

However, there are still some areas that need further refinement and exploration. First, I am intrigued by the fact that a large subset of the students did not value the peer-review process. Although some of them may not have received high-quality feedback from their peers, it is also possible that they lacked the metacognitive awareness to recognize what they were getting out of this activity. Similarly, about 20% of the students indicated that the in-class article analyses (of articles unrelated to the project) had not helped them on their term project, even though they believed these analyses had helped them learn the relevant course content. In future offerings, it might be helpful to make these connections more explicit to the students.

Another issue is that the number of assignments in the most recent iteration of the course was overwhelming to some students. Furthermore, students are sometimes “shocked” at the grades they receive on early versions of their written work. Although I want to continue to provide sufficient opportunities for students to develop and practice key skills, I am still working to try to identify the right number of assignments, to streamline the staged assignment process, and to help students view the progressive and iterative assignments as skill-building. It may be helpful for me to consult with former PSYC 430 students to gain greater insight into the student experience in the course.

All in all, while there is still room for improvement, the instructional partnership and scaffolding were successful in that we were able to get the students to perform well on a more challenging assignment. The instructional partnership enabled me to increase the resources and support available to the students without a significant increase in my own (or the GTA’s) workload. Furthermore, the students seemed to enjoy the staged approach to the assignment. I hope this process has encouraged students to seek support outside the classroom in the future; it would be interesting to see whether students who took this course are more likely to take advantage of resources such as the Writing Center and the Libraries in their other courses. I would also like to know whether they are able to generalize the writing, critical thinking and application skills developed in this course to other courses. Finally, another benefit was that the graduate students who served as GSFs made significant leaps in their own teaching skill and knowledge that will serve them well as future faculty.

Contact CTE with comments on this portfolio: cte@ku.edu.