Rethinking Exams

Below, you’ll find the following:

- Is an Exam the Right Tool?

- Exam Design Considerations

- Non-traditional Things to Try

- Skill Level and Tuning

- Examples

- References and Resources

Is an Exam the Right Tool?

Before writing an exam, pause to consider whether an exam will serve learning while honoring your boundaries.

To Exam or Not To Exam?

Why choose an exam? A more useful question may be: “In what ways could my exam give students practice with skills they will need?” Social workers, dramatists, lawyers, historians, geologists, linguists (most people, in fact) usually don't spend their days answering multiple choice questions about their discipline. Traditional exams, though familiar and sometimes useful, can be artificial exercises for students and instructors alike. For students, an exam may be a missed opportunity to practice skills they’ll actually use. For instructors, non-exam formats can offer a clearer picture of what students are capable of. If you can conceive of an activity that professionals in your discipline do and that can also be evaluated to determine a student’s learning or growth, consider having students do that instead.

There are, of course, reasonable arguments in favor of exams. For degrees that lead to professional licensure, course exams may serve as practice for licensing exams. (That said, those students will still need many other skills once they clear the licensure hurdle, and those skills are worth building into courses in ways that let students practice them and let instructors capture that practice.) The ratio of students to resources can also make authentic learning, or authentic demonstrations of it, difficult. A lone instructor may not be able to critique 100 original artworks or long-format research papers, which makes a multiple choice or short response exam an attractive option. Safety can also limit authenticity: settings that often require supervision, such as scientific laboratories or social work practice, may not be ideal venues for novice students to show their capabilities.

Exam Design Considerations

If an exam feels like the right balance of authenticity and logistical care, there are a number of design principles to consider before constructing your exam.

Things to Think Through

What should students be able to do? Practitioners of “backward design” can refer to their course, unit, and lesson goals for a ready list of the skills and knowledge they expect students to demonstrate. If course skills and content are meaningfully organized into units, the scope of the exam may already be determined. With or without outcomes or a course structure to refer to, it helps to identify the top 3 to 5 things the exam is about. You can include some questions for breadth, but keep most of them within your priority list. Better still, tell students in advance how they might focus their studying.

What specifically are you trying to capture about learning? Be mindful of what you hope to learn about student learning, and construct the exam accordingly. Consider: if an engineering problem requires students to use specific software, what do you, as an instructor, need information about: the students' thought process, their facility with the software, or both? If the answer is both, can you separate the two? That is, can you ask about the core concepts of the problem independently of the software? Doing so shows how much of a student's performance reflects understanding of the engineering concepts and how much reflects skill with the software, information that can then inform future instruction.

What is the scope of the exam? Is it focused on recent material, or is it comprehensive? (Research suggests that when people encounter information or repeat tasks in a recurring, or "spaced," way, it sticks in their brains longer and more reliably, which is one argument for including some cumulative content.) Decide, and commit to the plan. Relatedly, many instructors are drawn to the notion of “transfer”: seeing whether students can apply skills and knowledge in unfamiliar contexts or extend ideas learned in the course in novel ways. Exams can be a place to test transfer, but instructors should do so thoughtfully. Have students had multiple opportunities to practice transfer before? Has transfer been made explicit to students throughout the course and again before the exam? Have they received feedback on their previous attempts at transfer? If the answer to any of these is no, reconsider. Without proper preparation and communication in the course, such exams can be surprising and unfair to students.

What have students practiced? And, just as importantly, how? A general recommendation is to assess what students have practiced, in the way they practiced it. If students have practiced calculating definite trigonometric integrals, asking them to approximate the value of a trigonometric integral from a graph may not be appropriate, particularly if they’ve had no practice doing so. If students practice sight-reading in class, don’t ask them to transcribe a tune they hear on an exam. Did your students create a family tree of ancient Greek deities? Have them do the same on an exam. As with transfer above, if you intend to target the same core idea or skill in a different format, tell students in advance.

Consider, too, how long an exam will take; this will vary by course format. A common rule of thumb is that students need 2.5 to 3 times as long as an expert to complete an in-class exam. Consider having a colleague or teaching assistant “take” a draft exam, time them, and adjust accordingly. In online courses, especially asynchronous ones, it can be harder to keep an exam's time demands in check, but the same rule of thumb applies. It can be tempting to view an online course as an opportunity to give students additional practice or work, but keep in mind that online students juggle the same obligations as in-person students, and often more. Keep the scope of the exam focused on your goals and be mindful of the time it will take.
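The rule of thumb reduces to simple arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, the 2.5 and 3 multipliers come from the rule of thumb above, and the 18-minute expert time and 50-minute class period are made-up example values.

```python
def estimated_student_minutes(expert_minutes: float,
                              low: float = 2.5,
                              high: float = 3.0) -> tuple[float, float]:
    """Estimate the range of time students will need for an exam.

    Applies the rule of thumb that students take roughly 2.5 to 3 times
    as long as an expert (a colleague or TA) to finish the same exam.
    """
    if expert_minutes <= 0:
        raise ValueError("expert_minutes must be positive")
    return expert_minutes * low, expert_minutes * high


def max_expert_minutes(slot_minutes: float, high: float = 3.0) -> float:
    """Longest expert time that still fits the slot under the 3x multiplier."""
    return slot_minutes / high


if __name__ == "__main__":
    # Hypothetical: a TA finishes the draft exam in 18 minutes.
    low, high = estimated_student_minutes(18)
    print(f"Students will likely need about {low:.0f} to {high:.0f} minutes.")

    # Hypothetical 50-minute class period.
    print(f"Trim the draft until an expert finishes in about "
          f"{max_expert_minutes(50):.1f} minutes or less.")
```

With these example numbers, an 18-minute expert time suggests students need roughly 45 to 54 minutes, which could overrun a 50-minute period. The sketch uses the conservative 3x multiplier for the limit so that slower students are not squeezed.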

Where are your students taking the exam? Beyond its advantages, what constraints might the environment impose? Many instructors consider only how an environment might facilitate academic dishonesty; also ask how it might challenge students trying to demonstrate their knowledge. Especially in large courses with longer (often evening) exams, plan ahead for how you will handle bathroom requests. Keep a piece or two of candy on hand for students who face blood sugar issues. If you deliver spoken instructions or projected images during the exam, have a way to reach students who have difficulty hearing or seeing, respectively. And, of course, many students have accommodations that are often handled through a testing center. Be sure to communicate with testing center staff, who may have additional questions about what is and is not permitted during the exam. In summary: think ahead, communicate plans, and have contingency plans. The interaction between needs and environments is complex.

An exam is another opportunity to learn. However, this is only true when students 1) receive feedback on their efforts and 2) have the chance to try again. Both are important. One popular option is the two-stage exam, in which students take an exam individually and hand it in, then receive a fresh copy of the exam to work as a group. In this model, feedback on the first attempt comes from peers during the group attempt. In another approach, the instructor marks which responses are incorrect and returns the exam to students, who then explain the incorrect (and/or correct) answers and resubmit the exam to the instructor. An online search will turn up several other creative feedback-and-reattempt approaches, or instructors can develop their own.

Non-traditional Things to Try

Have the itch to move away from a traditional format without committing to a full-fledged project? There are several ways to breathe new life into your exams. Many strategies involve student-student or student-instructor interaction.

A Few Ideas to Shake Up Traditional Exams

Create "meaningful disruptions" or "lifelines" during the exam. Tell students that they will be interrupted during the exam for an activity meant to help them. Ask students who finish early to remain seated until after this point in the exam. Also instruct them to look through all of the exam questions before beginning in earnest. This sets the stage for any of the following to occur a third to halfway through the exam:

- Voting for Help: Prepare 3 to 5 slides, one for each of the 3 to 5 most challenging problems, and ask students to vote on the problem they would most like support with. (Voting could occur via clickers, color cards, show of hands, etc.) Based on the responses, pull up the slide for that problem. The slide might offer 1 to 3 hints (usually addressing common sticking points or misconceptions), specific points to address in a written response, or an outline of the steps in a difficult multi-step problem. While this may not address everyone's biggest concern, it tends to help the most students with their sticking points at a time when they are invested in getting support and can better learn from it. Depending on room setup and technology, displaying the slides for the top two or three problems has also worked well, helping an even broader group of students.

- Discussion Break: Allow 3 to 5 minutes of open conversation. Surprisingly, courses that have used this method have run into very few issues. The key is communication. Here's how it goes: prepare four slides.

  - The first is a written mirror of your spoken announcement: the exam is being interrupted for a good reason.

  - The second covers the rules. Post the rules and explain them: at the sound of a bell, buzzer, etc., students will have 3 to 5 minutes to discuss whatever they want with fellow students. No technology, no TAs or instructors, and talking only until the time limit ends at the next sound of the bell, buzzer, etc. Also explain that if the activity doesn't go well or is abused (i.e., students don't adhere to the rules), it won't be used during future exams.

  - The third slide says "Go!" and includes a timer students can see; sound the bell. When time runs out, click ahead.

  - The fourth slide says "Stop!" and should be accompanied by the same sound. Your verbal announcement to stop, along with instructions to return to the exam as normal, should suffice.

- Passing Notes: Before the exam, distribute large index cards to students. As with the other methods, this works only with good communication. Announce the interruption: "You have one minute to write your name at the top of your card and one question you have about one problem on the exam. Use good handwriting; you want this to be legible." After that minute, have students pass their cards to the classmate on their right. Each student writes their name below the question on the card they receive, then has 1 minute to write a neat response. It is in their best interest to genuinely try to address the question: "I don't know, either" isn't as helpful as "I think I remember X, but I don't know how it fits" or "Here's how far I got using this strategy." Then have students hand the cards back. Repeat, this time having students pass their cards to the classmate on their left, who writes their name below the prior response and has 2 minutes to comment on both the original question and the first response. When time is up, they hand the card back to the original student, who should now have new information to weigh against their sticking point.
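For the Voting for Help lifeline, a show of hands is easy to tally by eye, but clickers or an online poll may deliver the votes as a list. This minimal Python sketch assumes each vote is simply the number of the problem a student wants help with, and picks which hint slide (or slides) to show; the function name and the sample votes are hypothetical.

```python
from collections import Counter


def top_problems(votes: list[int], n_slides: int = 1) -> list[int]:
    """Return the most-requested problem numbers, most popular first.

    Ties are broken by problem number (lower first) so the choice is predictable.
    """
    counts = Counter(votes)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [problem for problem, _count in ranked[:n_slides]]


if __name__ == "__main__":
    # Hypothetical votes; each number is a problem that has a prepared hint slide.
    votes = [4, 7, 4, 2, 9, 7, 4, 5, 7, 4, 2]
    print(top_problems(votes))     # one slide:    [4]
    print(top_problems(votes, 3))  # three slides: [4, 7, 2]
```

Breaking ties by problem number is an arbitrary choice; an instructor might instead prefer to show the later, usually harder, problem.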

Randomizing questions or formats to add interest and involvement: Many disciplines have complex but mechanical skills that can be applied to many items: labeling muscles on a diagram in an anatomy class, drawing Lewis structures from chemical formulas in chemistry, or explaining how two (seemingly disparate?) historical government documents relate to one another. Rather than putting just a couple of such questions on the exam, identify several that you can envision using and create a list or table of them. Then associate these items with some type of randomization: numbers on a 20-sided die, cards in a deck (or a subset of a deck), etc. Before the exam, draw cards, roll dice, etc. (or have students do this!) to select the particular items on the exam. For example, a student rolls a 20-sided die three times, getting a 2, 6, and 15. According to the correspondence list, the student must identify the sartorius, orbicularis oris, and flexor digitorum profundus on a diagram during the exam. As another example, perhaps students draw a jack of spades, 3 of hearts, and 9 of hearts, requiring them to draw chemical structures for CH₃OCH₃, SO₃²⁻, and C₂NH₈. Imagine a history class: "Oh, man. That red marble and yellow marble that Tiffany drew mean I have to connect the Bill of Rights to the Social Security Act? Oof..." You get the idea. This works best when students have the comprehensive list of items in advance.
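Here is a minimal Python sketch of the correspondence-list idea, assuming the instructor keeps the list as a table keyed by die face. Only the three muscle entries come from the example above; a real table would fill all 20 faces, and the function names and placeholder items are hypothetical. Drawing without repeats mimics re-rolling a duplicate, which is an assumption rather than part of the method described.

```python
import random
from typing import Optional

# Partial correspondence table from the anatomy example (die face -> muscle).
# A full table would have an entry for every face of the 20-sided die.
MUSCLES = {
    2: "sartorius",
    6: "orbicularis oris",
    15: "flexor digitorum profundus",
}


def items_for_rolls(rolls: list[int], table: dict[int, str]) -> list[str]:
    """Look up the exam items that correspond to the rolls (or card draws)."""
    return [table[roll] for roll in rolls]


def draw_items(table: dict[int, str], k: int,
               rng: Optional[random.Random] = None) -> tuple[list[int], list[str]]:
    """Randomly choose k distinct faces from the table, as if re-rolling repeats."""
    if rng is None:
        rng = random.Random()
    faces = rng.sample(sorted(table), k)
    return faces, items_for_rolls(faces, table)


if __name__ == "__main__":
    print(items_for_rolls([2, 6, 15], MUSCLES))
    # ['sartorius', 'orbicularis oris', 'flexor digitorum profundus']

    # Hypothetical full table with placeholder items for all 20 faces.
    full_table = {face: f"item {face}" for face in range(1, 21)}
    faces, items = draw_items(full_table, 3)
    print(faces, items)
```

Passing a seeded `random.Random` to `draw_items` makes a draw reproducible, which may help if you want to regenerate the same exam version later.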

Have students create all or some of the exam questions. Each student is in charge of designing one or more questions for the exam. To make this a deeper learning experience, students should provide a correct answer with an in-depth explanation, and identify how their question could uncover or dispel misconceptions. Before the exam, make sure students get feedback on their questions and answers, which allows the instructor to correct misinformation. Then tell students that some or all of their questions will appear on the exam and will make up some or all of it. The benefit is twofold: each student studies at least one facet of the material in more depth, and students typically collaborate (or at least exchange information) more in the lead-up to the exam.

Have students work the exam individually, and then in groups. This two-stage format, popularized by Wieman and colleagues, has gained considerable traction in the last decade. Students first complete a copy of the exam on their own, as in a traditional exam. They then hand in their individual copy and receive a new copy of the same exam to complete in a group. In this model, students get their first round of feedback from their peers as they discuss the questions in their groups. Groups then complete the exam together and hand it in. (If you've ever seen students waiting for each other in the hall during an exam so they can talk about it, this strategy leverages that impulse in a structured way to promote learning during the experience.) Note that well-designed two-stage exams include some questions difficult enough that no single student in the group can answer them alone (i.e., no one can serve as the "go-to" person for the answer). Each student receives a score that is a composite of their individual and group scores.
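The text above doesn't specify how the individual and group scores are combined. As one possibility, the Python sketch below uses a weighted average; the 15% group weight and the rule that the group portion counts only when it raises a student's score are hypothetical choices, not a prescribed standard, so adjust both to your own grading policy.

```python
def two_stage_score(individual: float, group: float,
                    group_weight: float = 0.15,
                    group_only_if_helps: bool = True) -> float:
    """Combine individual and group exam scores (both on the same scale, e.g. 0-100).

    The composite is a weighted average. If group_only_if_helps is True, a
    student never scores below their individual result because of the group stage.
    """
    if not 0.0 <= group_weight <= 1.0:
        raise ValueError("group_weight must be between 0 and 1")
    composite = (1.0 - group_weight) * individual + group_weight * group
    if group_only_if_helps:
        return max(individual, composite)
    return composite


if __name__ == "__main__":
    # Hypothetical scores out of 100.
    print(f"{two_stage_score(70, 90):.1f}")  # group helps: 73.0
    print(f"{two_stage_score(80, 60):.1f}")  # group would lower it, individual kept: 80.0
    print(f"{two_stage_score(80, 60, group_only_if_helps=False):.1f}")  # 77.0
```

The "only if it helps" option is a design choice meant to keep the group stage low-stakes; turning it off makes the group portion count in both directions.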

Skill Level and Tuning Exams

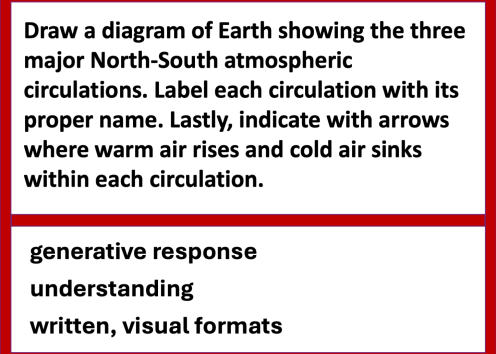

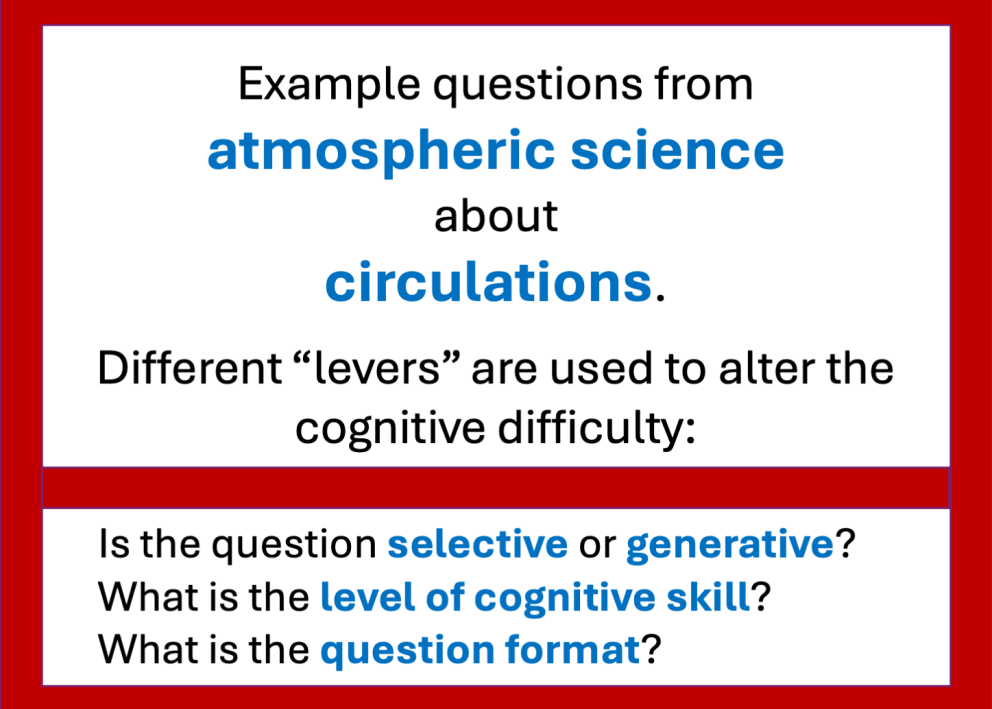

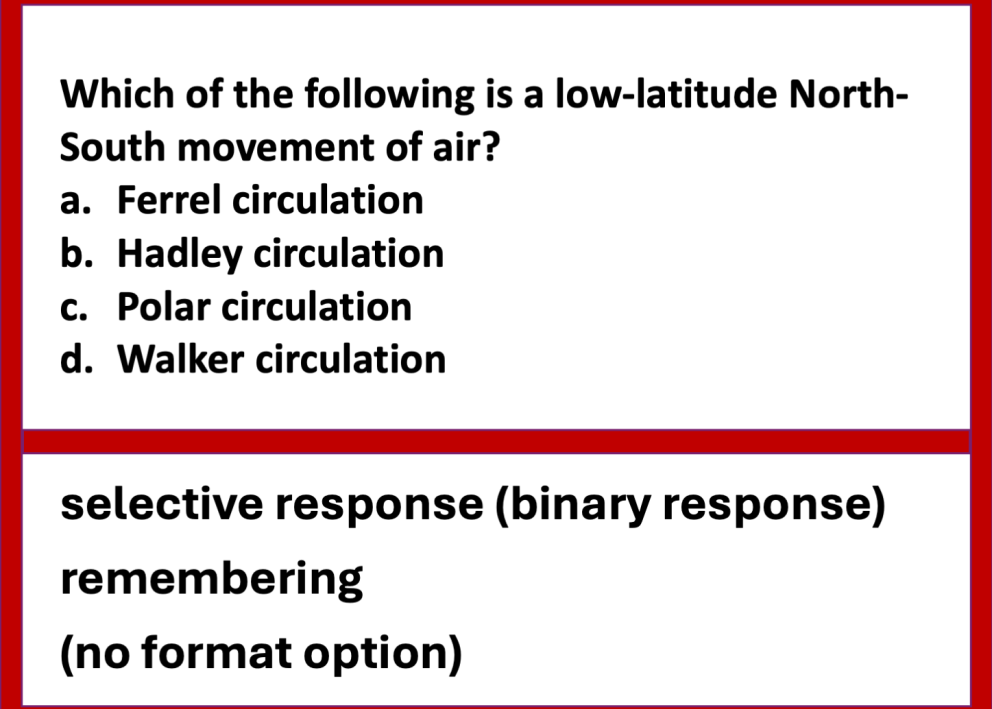

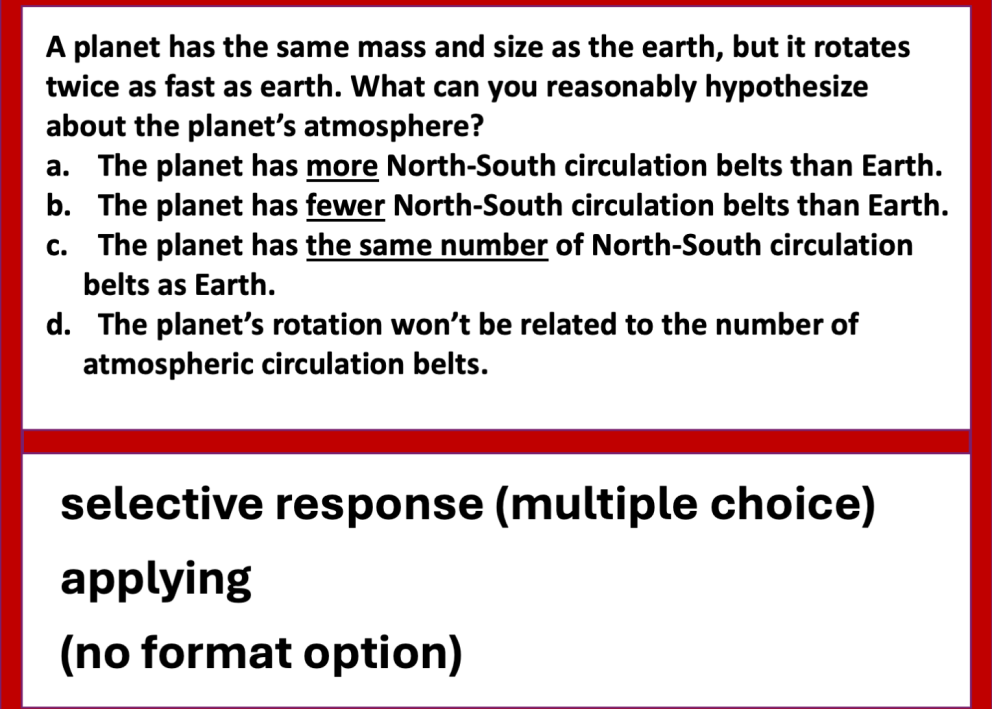

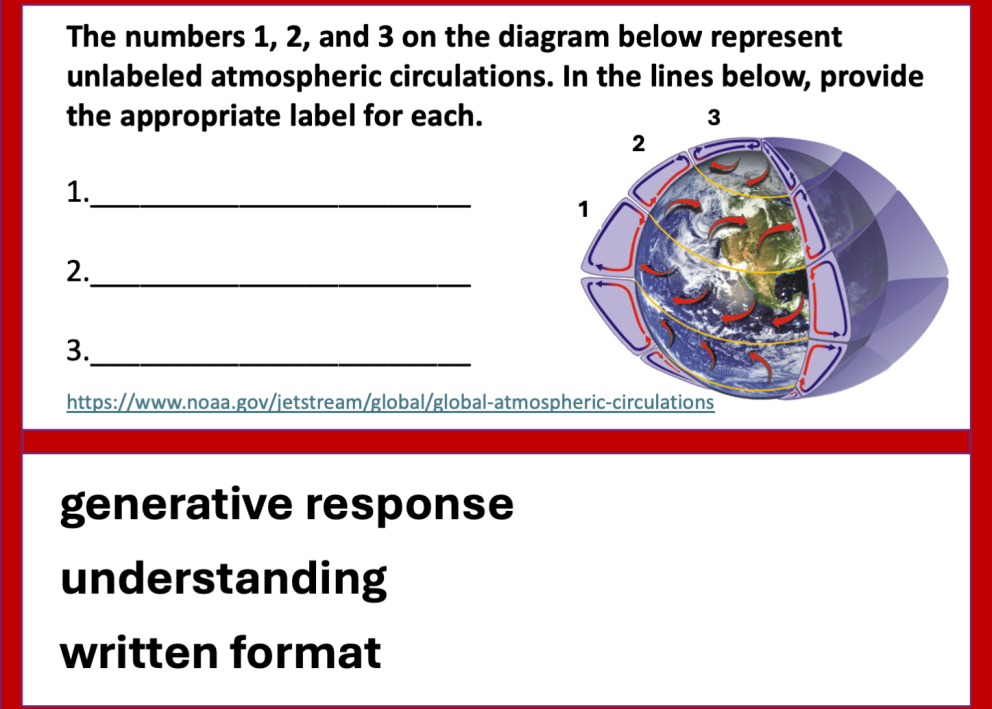

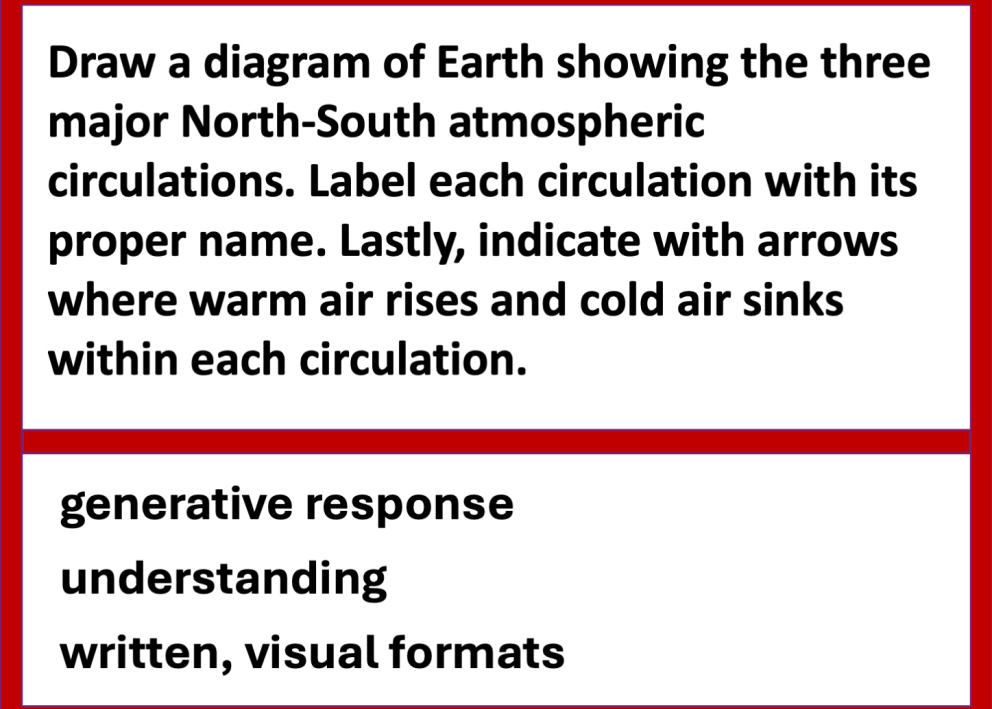

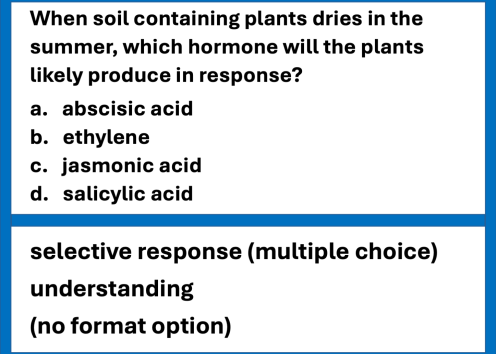

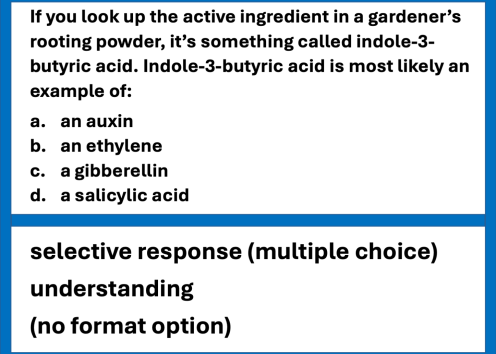

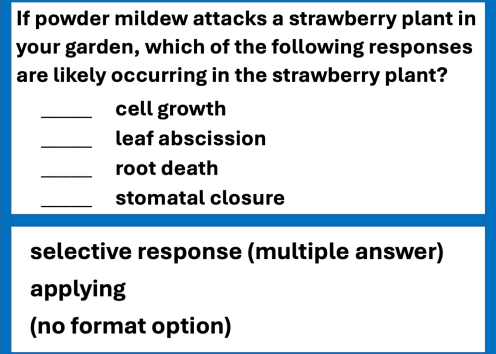

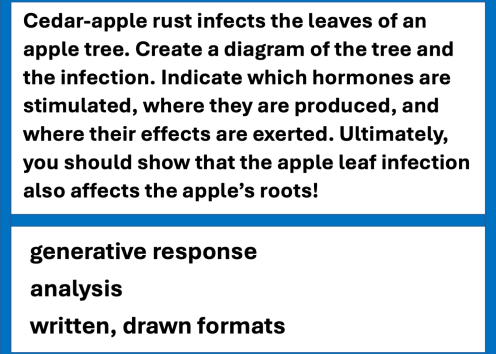

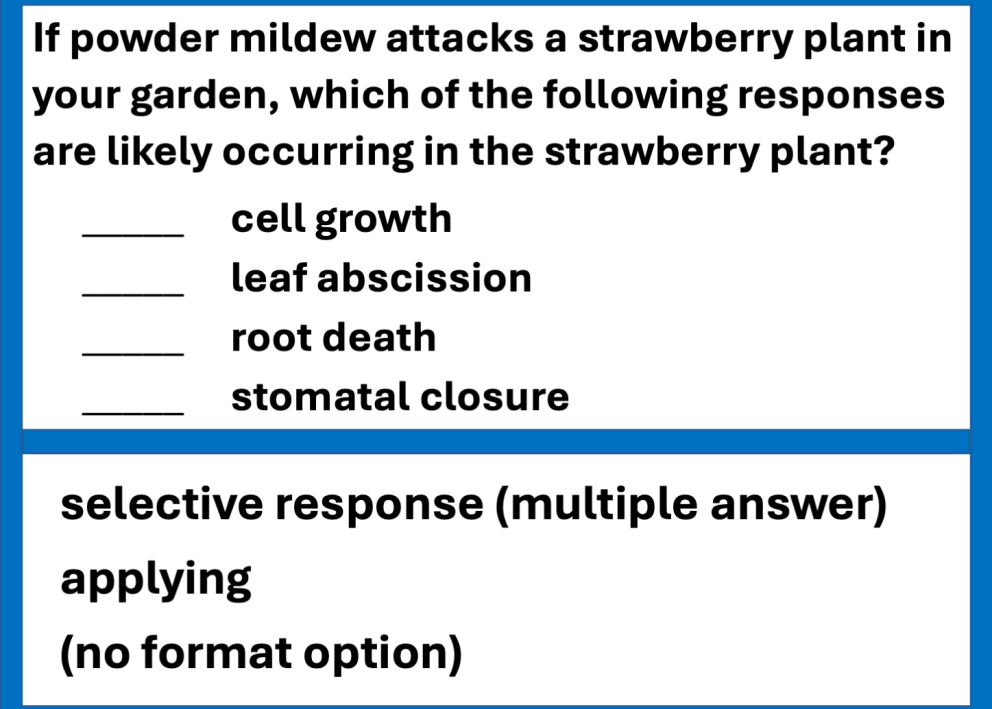

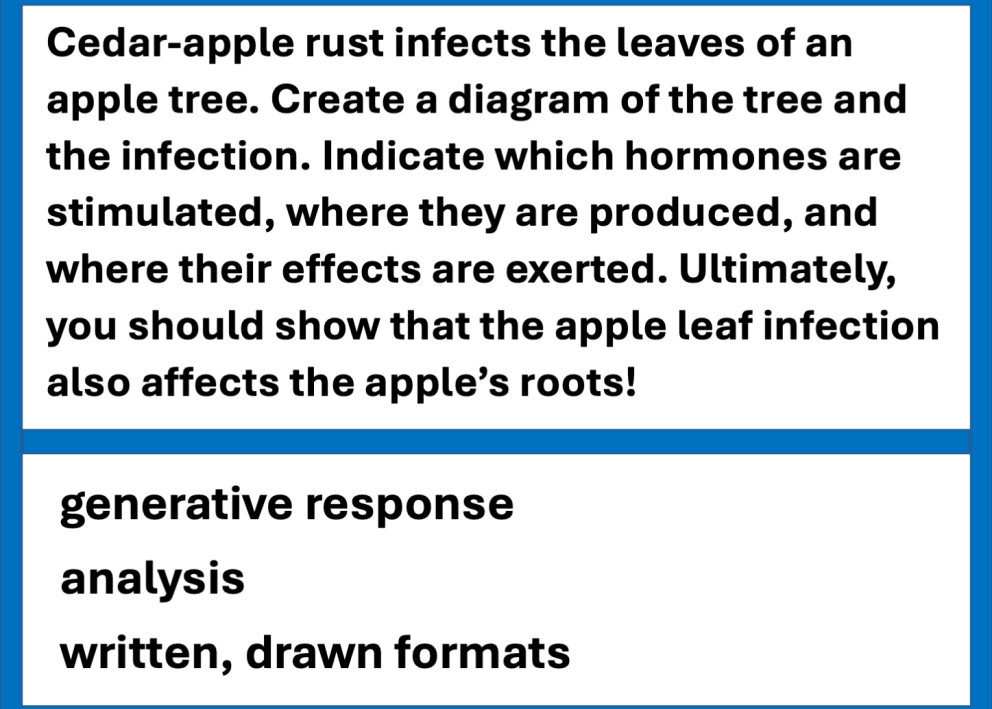

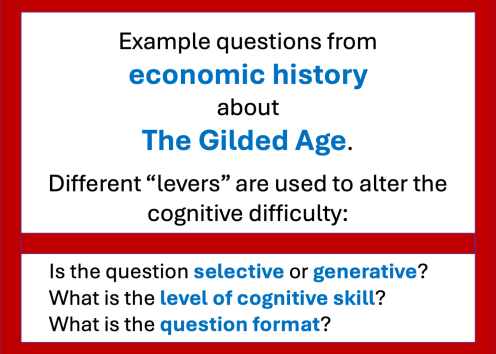

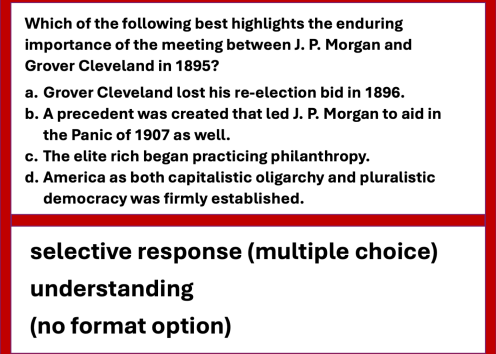

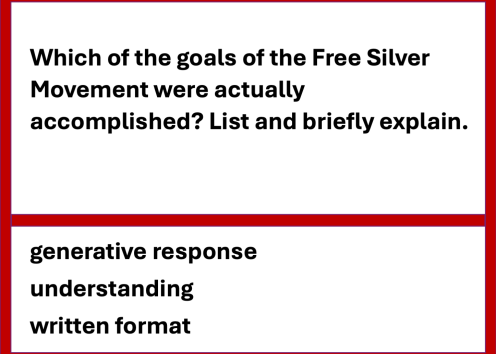

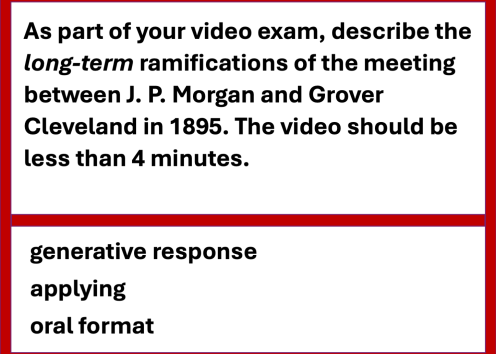

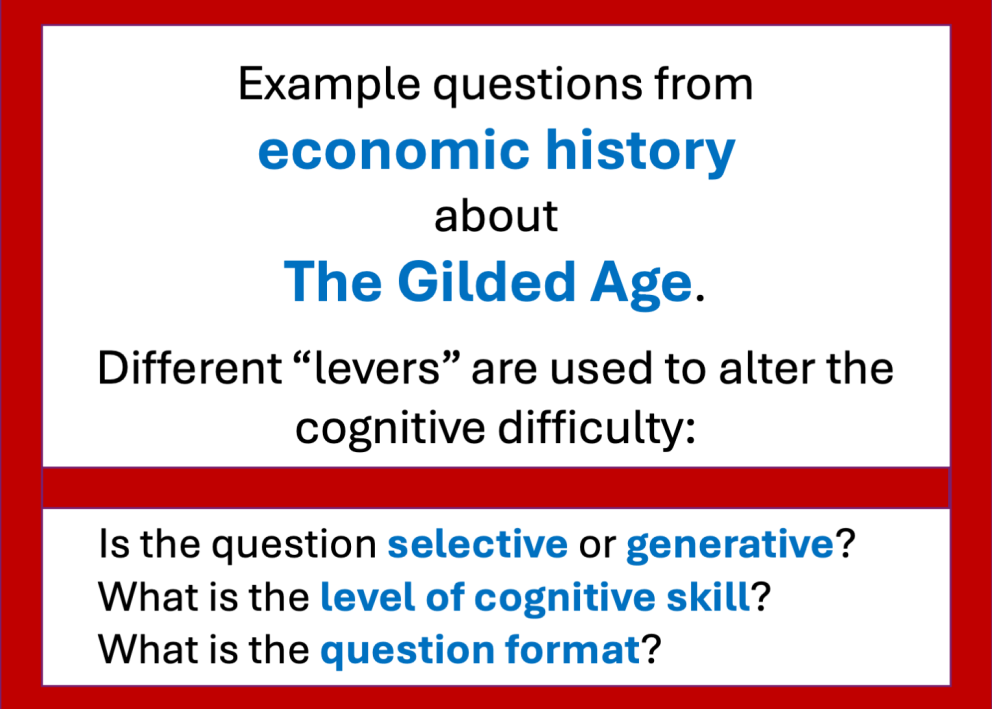

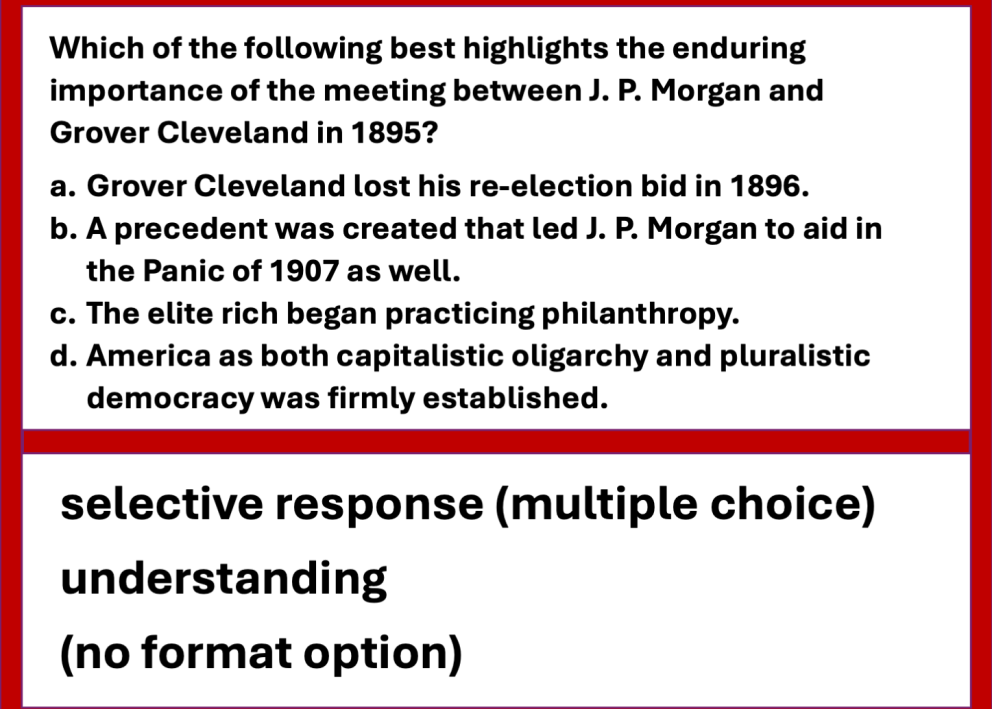

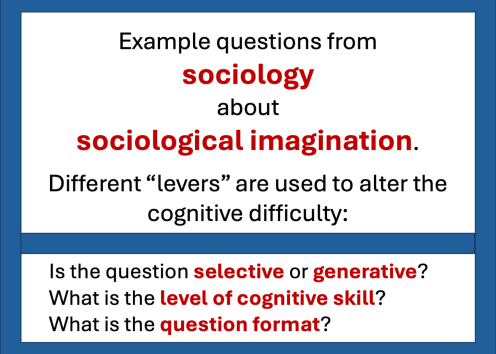

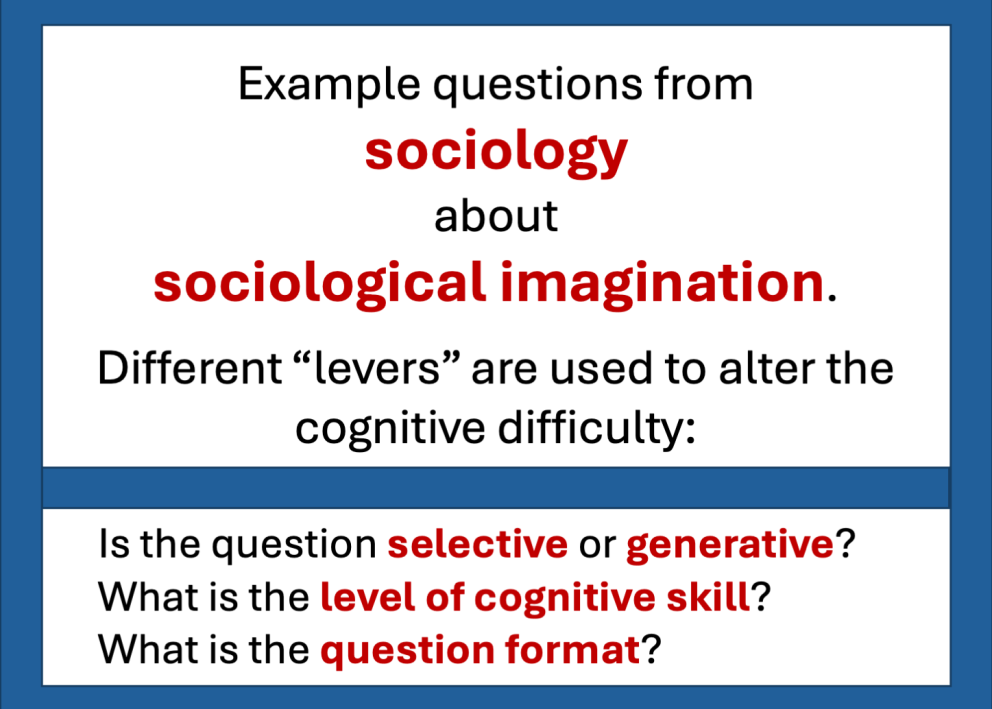

Once you have decided that an exam is the best way to give students practice and capture their learning, you have several levers at your disposal for adjusting its difficulty.

Skill Level and Tuning

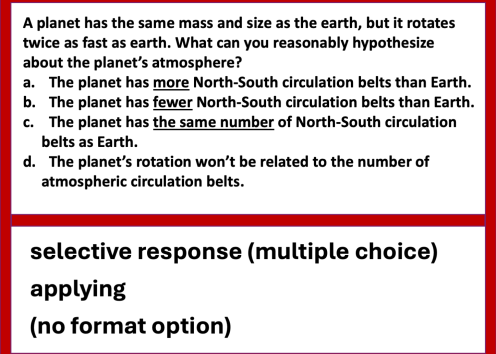

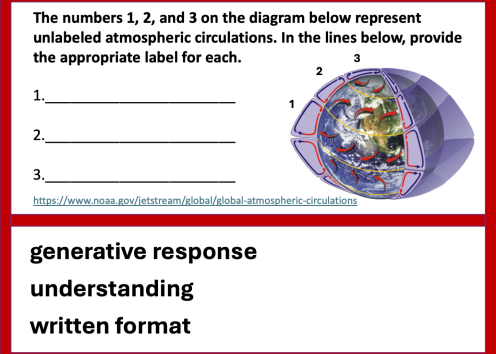

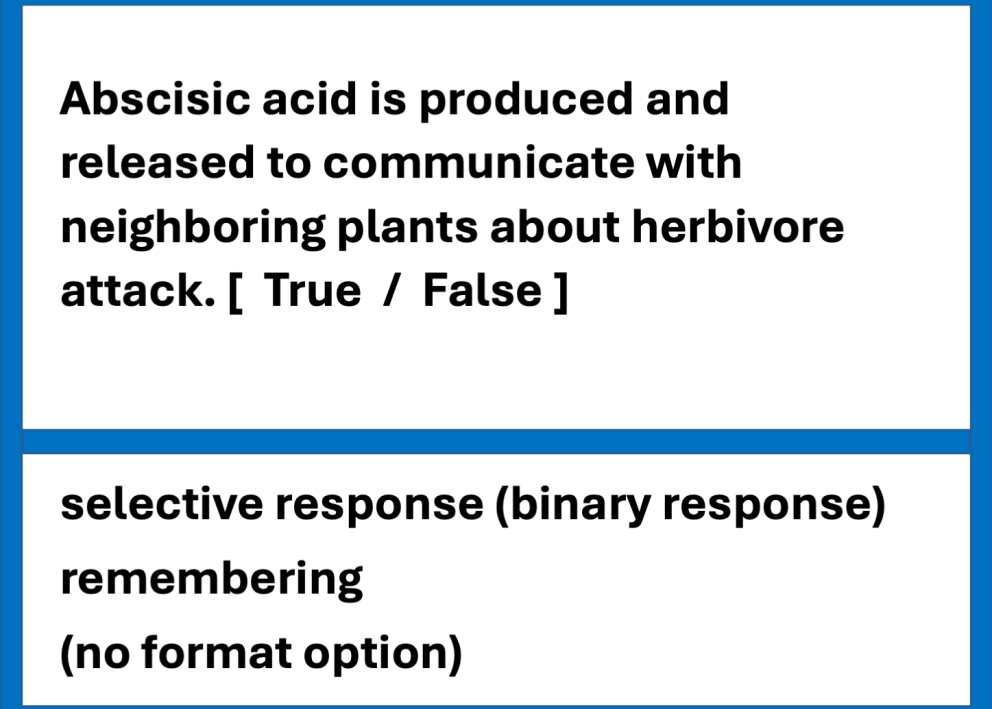

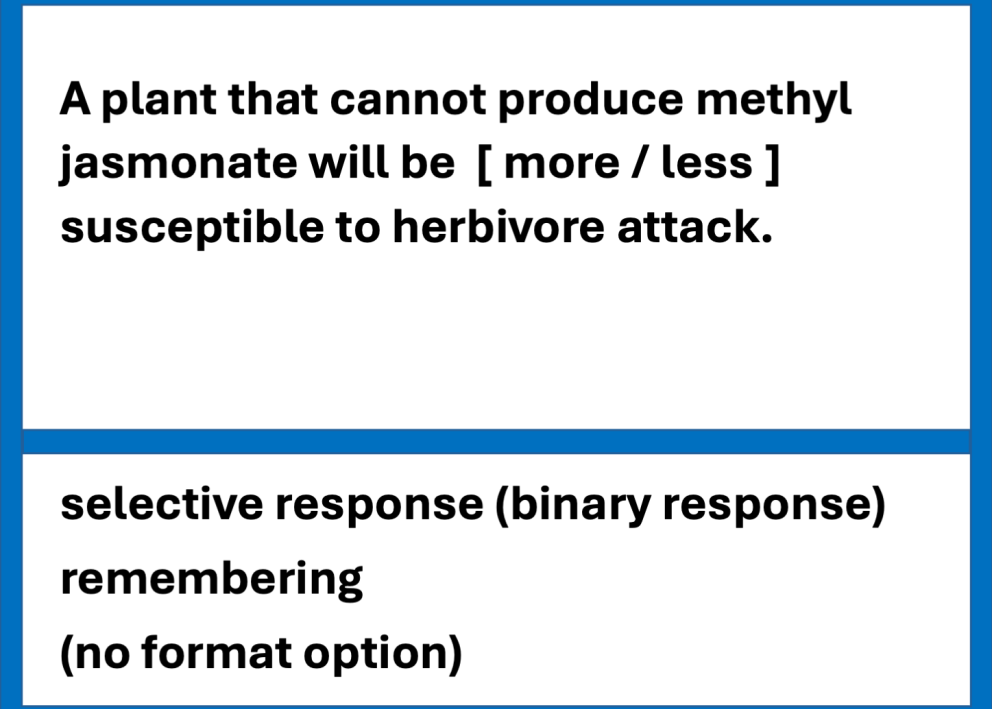

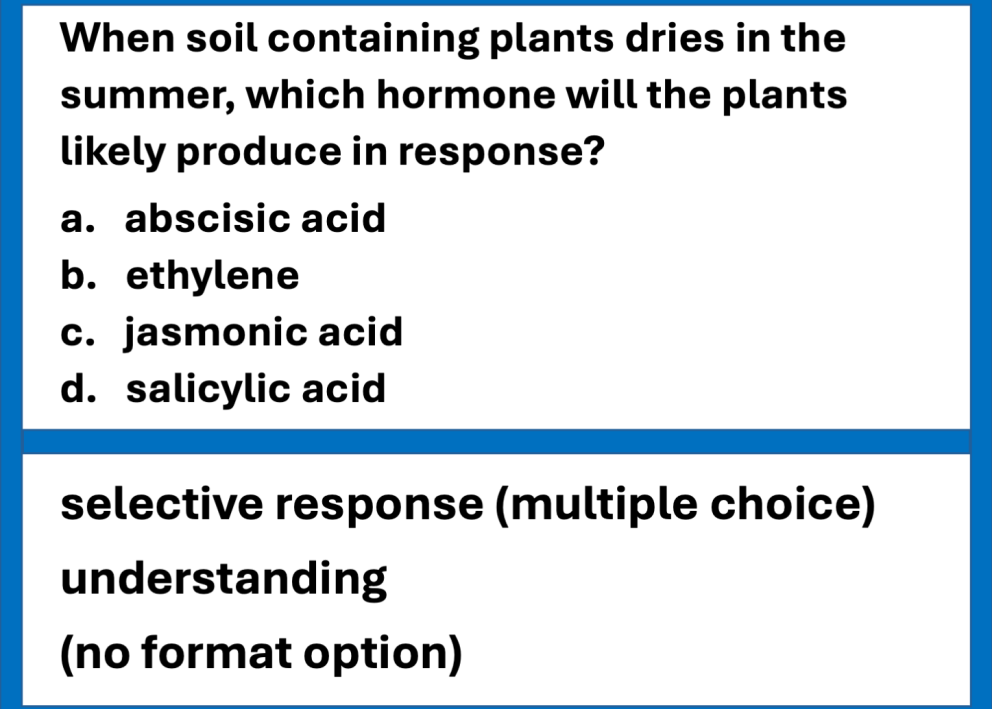

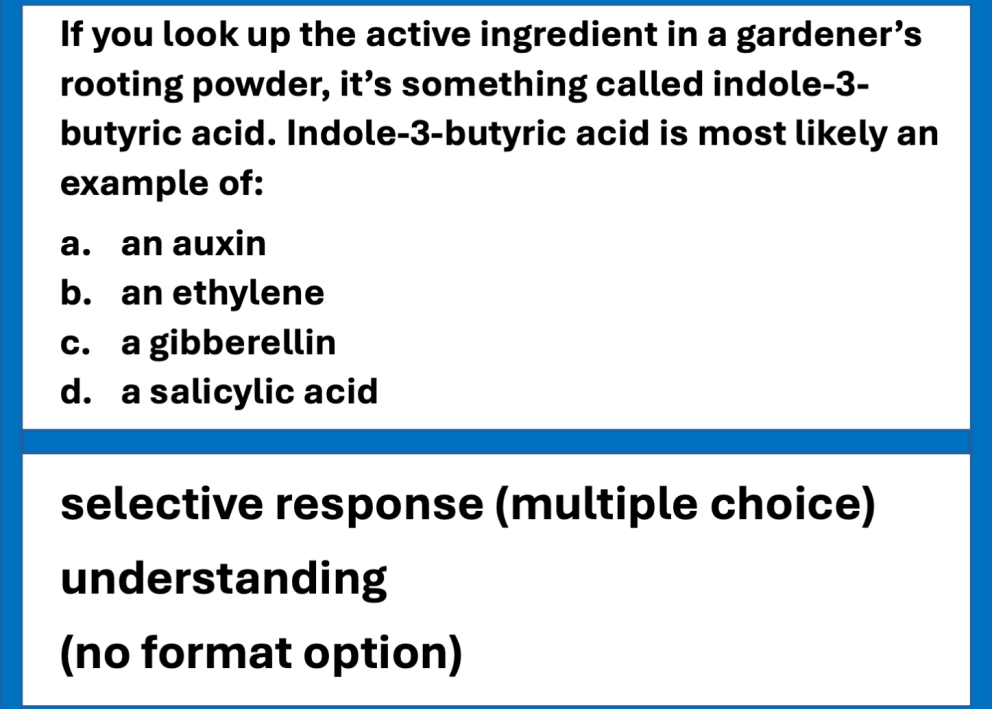

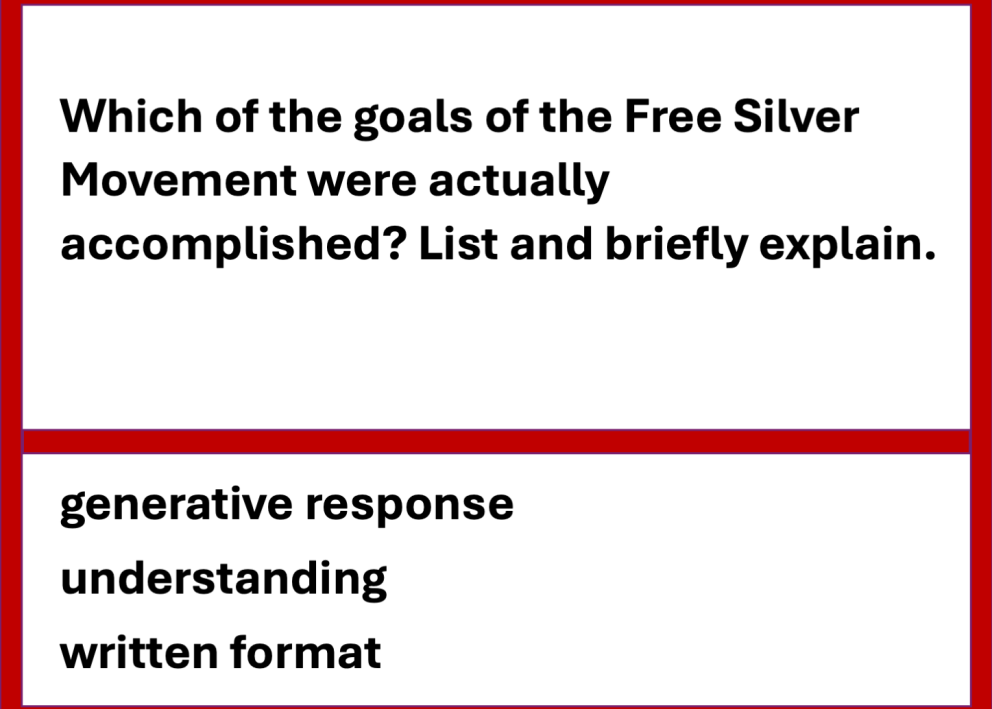

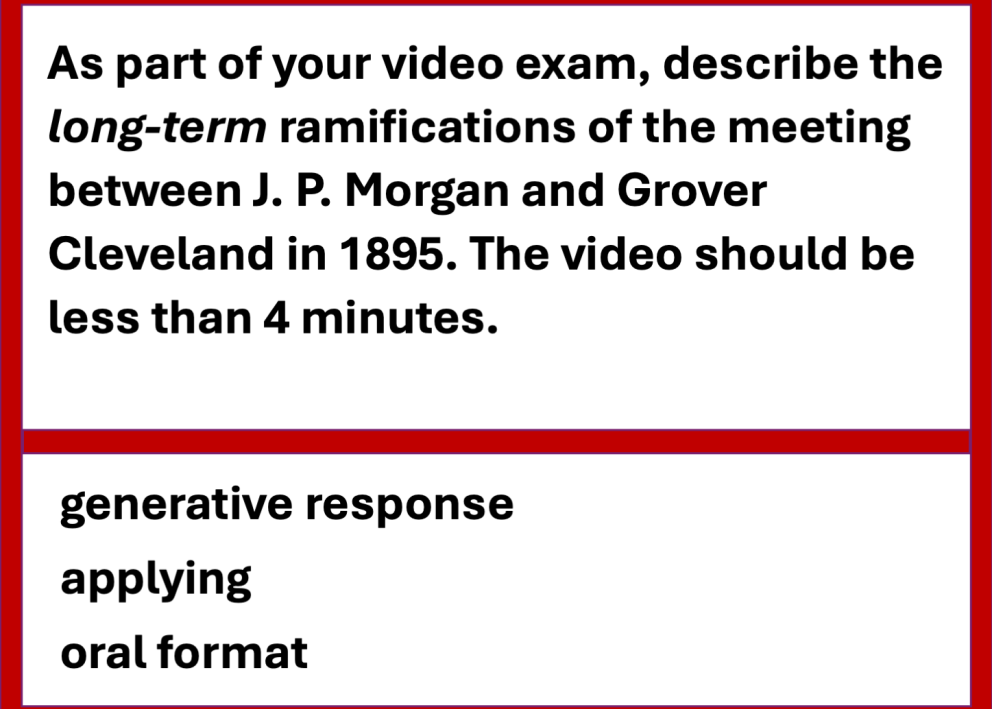

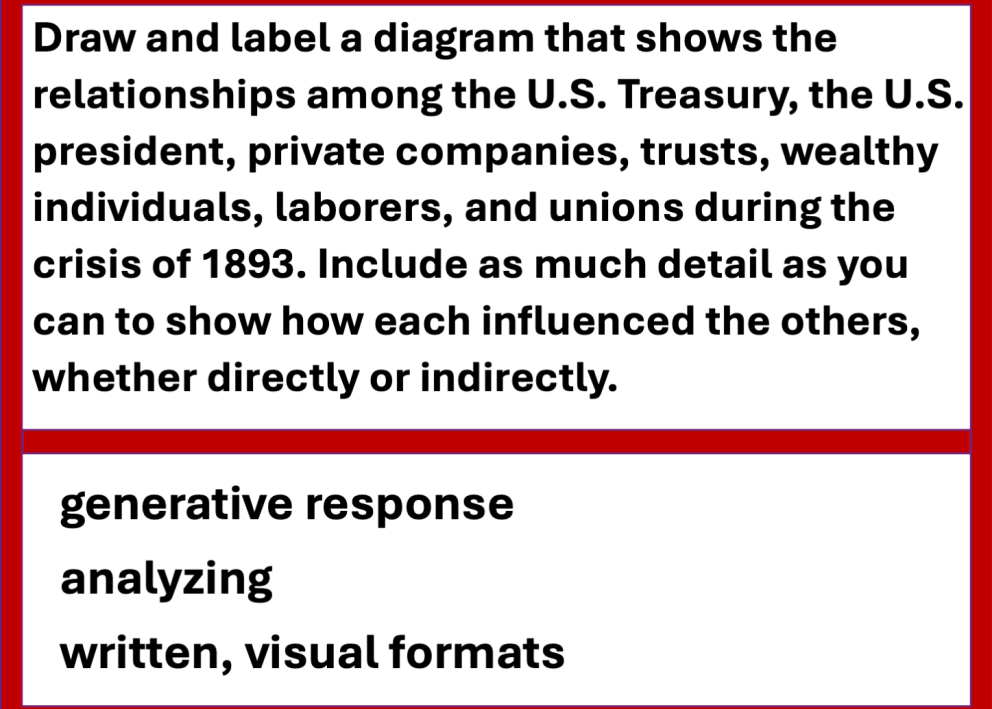

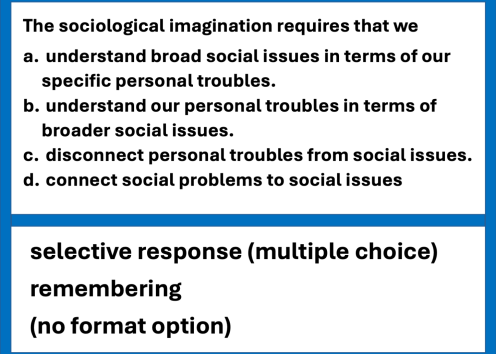

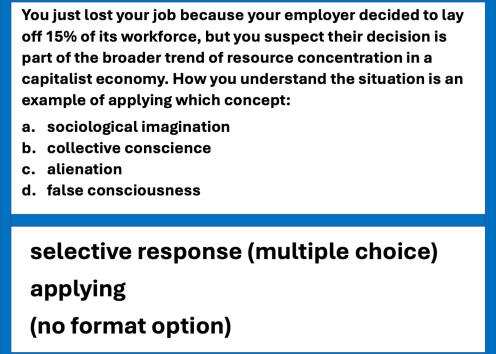

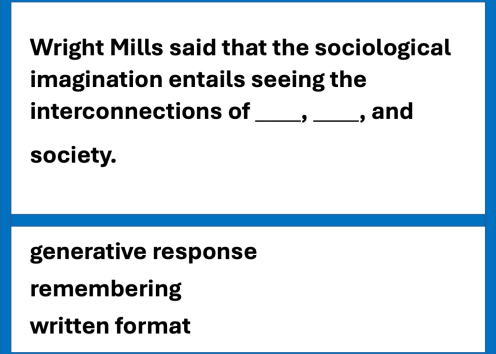

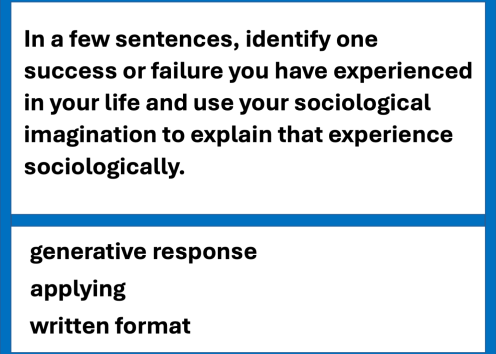

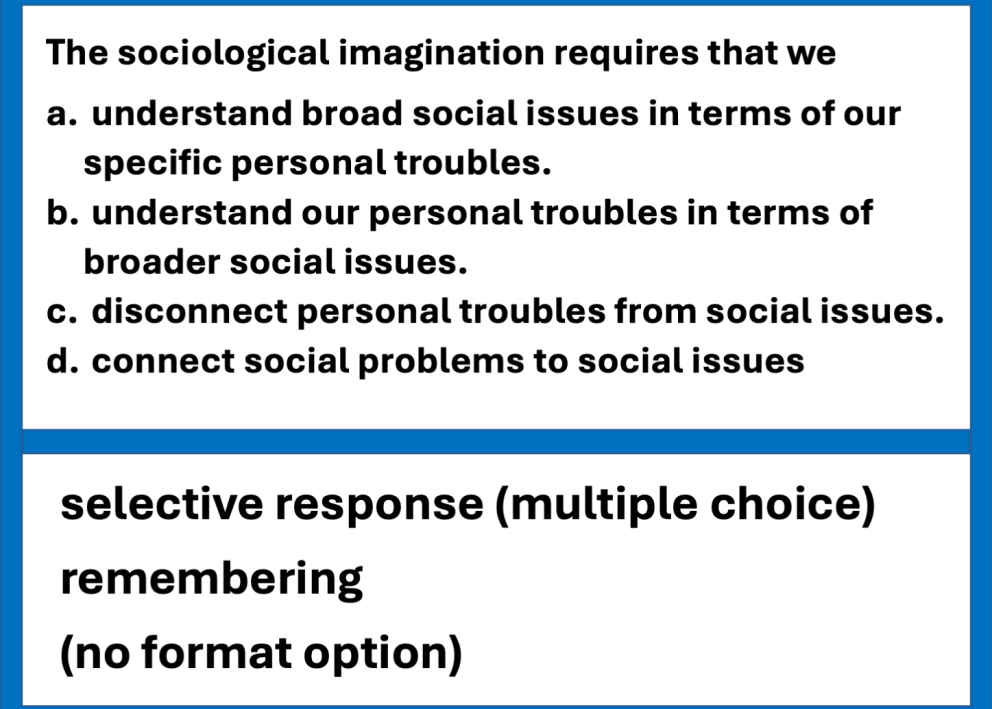

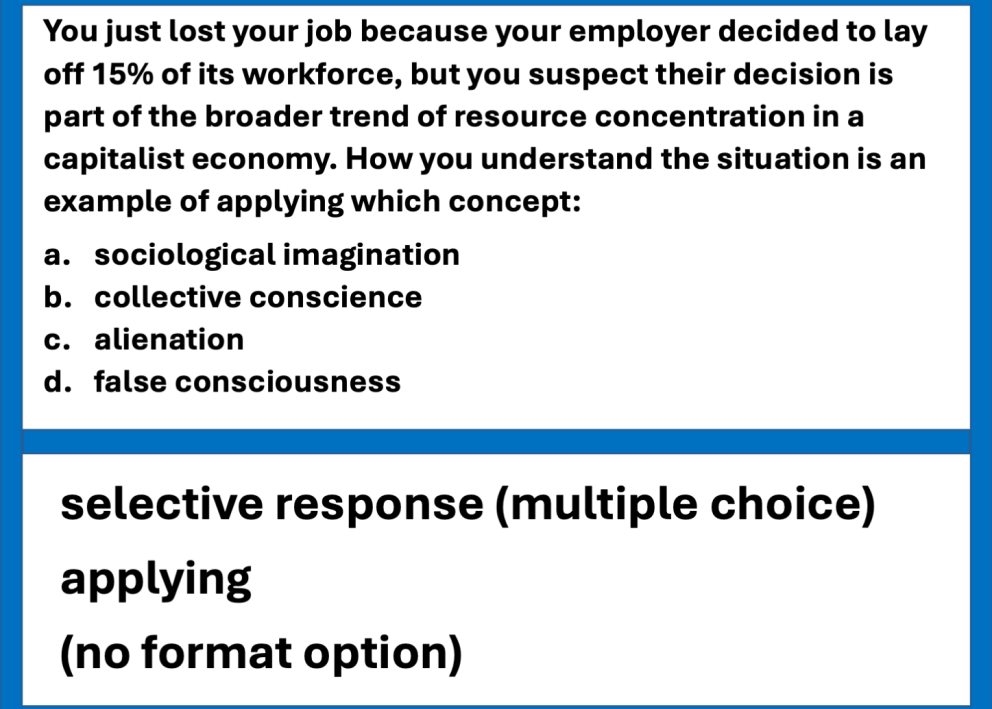

Selective response questions* generally impose a lower cognitive load than generative response questions** because, all else being equal, selective response questions present a finite set of possibilities, which reduces the demand on retrieval. The brain doesn't have to do as much work "searching" for the answer. This affords a simple and powerful way to adjust the difficulty of an exam: replace selective response with generative response to increase difficulty, and replace generative response with selective response to decrease it.

*Selective response questions are those in which students select an answer from a list of options. This includes formats such as dichotomous choice (true/false, yes/no, opinion/fact, etc.), matching, multiple choice, multiple answer, ordering/ranking, etc.

**Generative response questions are those in which students must produce the information themselves. This includes formats such as fill-in-the-blank, labeling, listing, short answer, essay, etc.

Although taxonomies of learning are often associated with writing learning outcomes, many can also help instructors think about the difficulty of specific tasks, including those that appear on exams. These taxonomies divide learning into categories, and many are also hierarchies: the categories are ordered by the level of skill they require. As in any proper hierarchy, lower-level items support higher ones. (Conversely, higher-level learning is predicated on lower-level learning.) Because of this, hierarchies of learning offer another way to adjust the difficulty of tasks posed to students. Here are some examples:

- Perhaps the class isn't ready to generate a new argument during a timed exam, but you're interested in seeing students' skill in argumentation. Could they discuss the merits of arguments you present instead? Would this give you a glimpse of their skill in this area, more quickly and in line with abilities they've already practiced? That is, you might exchange the "create" level for the "evaluate" or "analyze" level to decrease difficulty.

- An introductory course doesn't need to be just definitions. You could ask, "What factors contributed to the high death toll of the 1906 San Francisco earthquake?" Students could answer this by recalling bullet points from class; recall is typically a low-level (and therefore relatively easy) skill. Perhaps, though, you want to challenge students. Asking them to do something with that same knowledge increases the difficulty substantially: "If an earthquake of the same magnitude as the 1906 event happened today, would the death rate in the city be higher or lower? Why do you think so?" This reformulation requires remembering, understanding, applying, and analyzing. (What factors led to deaths in 1906? Have those factors been addressed by policy and modern engineering? As a rate, not a raw count, how do these modern improvements square with increases in population?) That is, you might exchange the "remember" level for the "apply" or "analyze" level to increase difficulty.

The University of Illinois Chicago has a thorough treatment of Bloom's taxonomies for three domains of learning (cognitive, affective, and psychomotor). Notably, each of Bloom's taxonomies is also a hierarchy. Reproduced in brief, these are:

- Cognitive Domain: remembering, understanding, applying, analyzing, evaluating, creating

- Affective Domain: receiving, responding, valuing, organizing, internalizing

- Psychomotor Domain: perceiving, acting, practicing, sustaining, combining, adapting, originating

Iowa State University offers a good summary of Anderson and Krathwohl's two-dimensional taxonomy (hierarchy), including a representation of the 2D taxonomy.

Arizona State University has an excellent Taxonomy of Digital Learning article. This may find special use in online courses, where student tasks are often digital in nature.

Fink's Taxonomy of Significant Learning is well represented online by the University at Buffalo. It combines elements of cognition and affect in a way that promotes robust and durable learning.

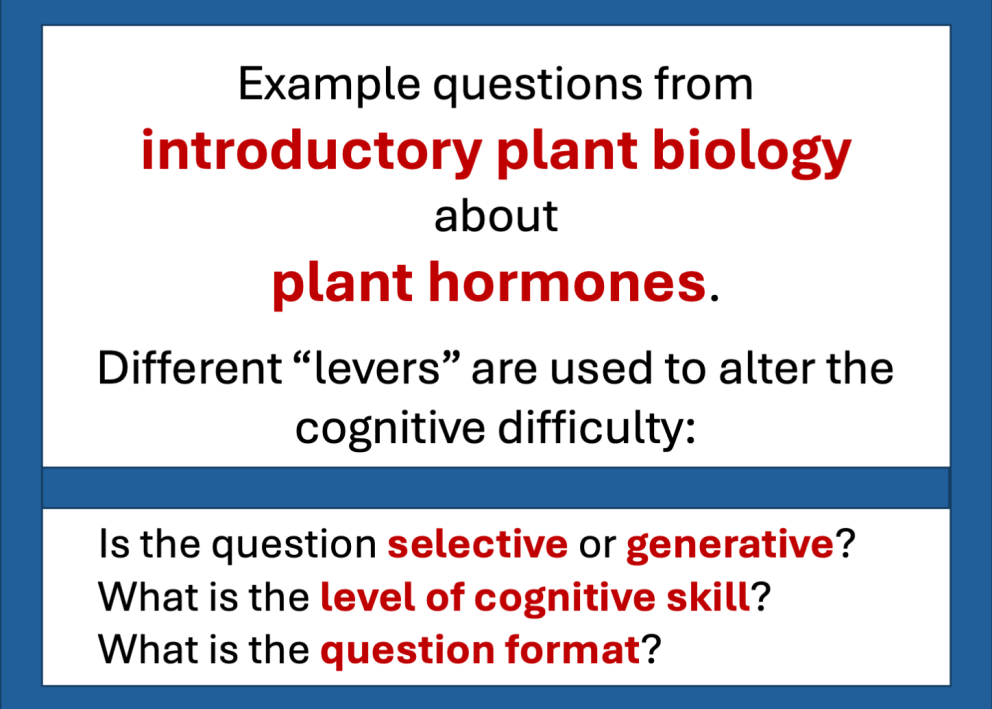

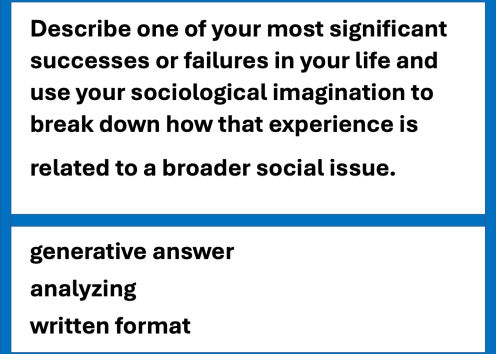

Examples

Here are examples of how an instructor can take a particular topic and use the levers discussed to adjust difficulty.

References and Resources

Anderson, L.W. (Ed.), Krathwohl, D.R. (Ed.), Airasian, P.W., Cruikshank, K.A., Mayer, R.E., Pintrich, P.R., Raths, J., & Wittrock, M.C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's Taxonomy of Educational Objectives (Complete edition). New York, NY: Longman.

Bloom, B. S. (Ed.), Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook 1: Cognitive domain. New York, NY: David McKay Company.

Fink, L. D. (2013). Creating significant learning experiences: An integrated approach to designing college courses. San Francisco, CA: Jossey-Bass.

Harrow, A. J. (1972). A taxonomy of the psychomotor domain: A guide for developing behavioral objectives. New York, NY: David McKay Company.

Heer, R. (2012). "A Model of Learning Objectives–based on A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives." Center for Excellence in Learning and Teaching, Iowa State University. Retrieved Dec. 4, 2024 from https://celt.iastate.edu/prepare-and-teach/design-your-course/blooms-taxonomy/

Krathwohl, D. R., & Anderson, L. W. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's Taxonomy of Educational Objectives. United Kingdom: Longman.

Simpson, E. (1972). Educational objectives in the psychomotor domain. Washington, D.C.: Gryphon House.

Sneed, O. (2016). "Integrating Technology with Bloom's Taxonomy." Arizona State University Teach Online. Retrieved Dec. 4, 2024 from https://teachonline.asu.edu/2016/05/integrating-technology-blooms-taxonomy/

Stapleton-Corcoran, E. (2023). “Bloom’s Taxonomy of Educational Objectives.” Center for the Advancement of Teaching Excellence at the University of Illinois Chicago. Retrieved Dec. 4, 2024 from https://teaching.uic.edu/blooms-taxonomy-of-educational-objectives/

Wieman, C. E., Rieger, G. W., & Heiner, C. E. (2014). Physics exams that promote collaborative learning. The Physics Teacher, 52, 51-53.